AI Investing: The Future of Personal Wealth

“AI investing” is everywhere right now – and often means wildly different things. People mix up at least three ideas:

- buying shares in AI companies and “AI stocks”

- using AI‑powered tools to help you invest

- supposed AI “trading bots” that promise guaranteed returns and are frequently scams

This article is about the second: AI as a practical tool inside the investment process, not a shortcut to easy money. For our purposes, AI investing means using automation, machine learning and language models at different stages of how money is managed – from research and portfolio construction to trading, monitoring and reporting.

We will look at three main groups of tools:

- robo‑advisers and digital portfolio platforms

- algorithmic trading apps and systematic strategies

- AI‑powered research and portfolio “co‑pilots” used by advisers and institutions

The approach throughout is deliberately balanced. AI can bring real advantages in speed, scale and personalisation, and can strengthen risk and compliance. At the same time, it introduces familiar and durable hazards: hype, opacity, weak controls and outright fraud – from “AI‑washed” products that barely use machine learning at all, to unauthorised schemes like “Microsoft AI Invest” flagged on the FCA Warning List.

This is not personalised advice or a list of hot stocks. It is a sceptical guide to how AI‑enabled investing actually works – and how to tell serious, well‑governed providers from marketing spin.

What “AI investing” really means today

When people talk about “AI investing”, they often picture a single clever system that finds winning trades on its own. In reality, AI shows up as a set of tools plugged into different steps of the investment process, used by both individuals and institutions to make existing workflows faster, cheaper and more consistent. As the CFA Institute puts it, AI in asset management is essentially “another form of modelling”, layered on top of established investment principles rather than replacing them outright.

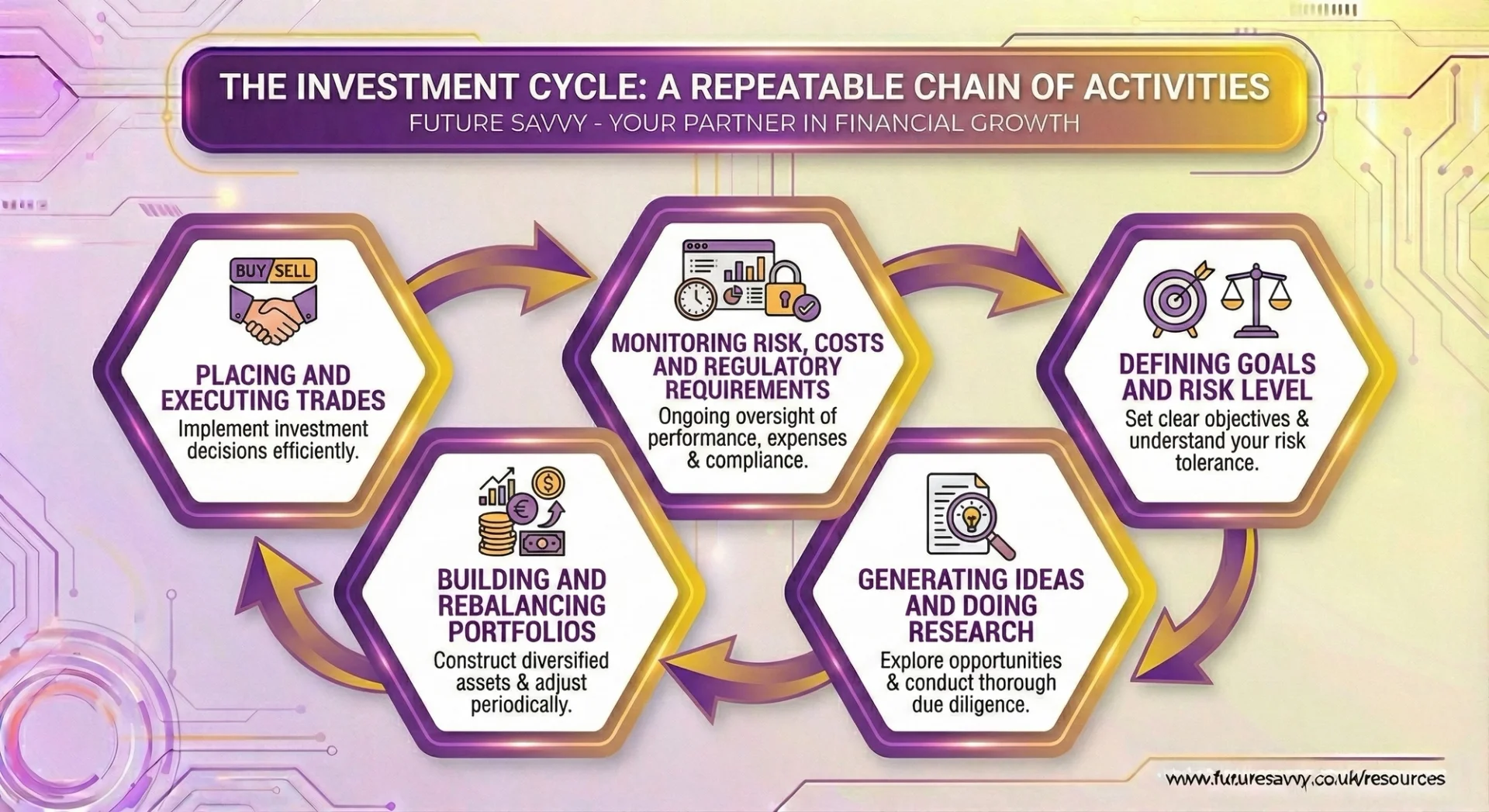

The investment value chain and where AI fits

AI does not replace this chain (research, portfolio construction, trading, monitoring and reporting) with a magic box. Instead, different systems support specific links: chatbots help with research, optimisation tools support portfolio design, execution algorithms handle trading, and monitoring tools track risk and compliance.

In practice, large managers increasingly use machine‑learning models to extract signals from big data, while relying on traditional optimisation for risk control and implementation, a pattern documented in the CFA Institute’s work on AI and big data in investments. Across all of these uses, AI provides automation and decision support, with humans still setting objectives and making final calls.

Robo‑advisers and digital portfolio platforms

For individuals, the most visible form of AI investing is the robo‑adviser or digital wealth platform. In practice, these platforms:

- Start with an online questionnaire to gauge your goals, time horizon and risk tolerance

- Map your answers to a model portfolio, usually built from low‑cost index funds and ETFs

- Automatically rebalance when markets move, and in some markets realise tax losses within set rules
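To make the questionnaire‑to‑portfolio mapping and automatic rebalancing concrete, here is a minimal Python sketch. The scoring rule, the three model portfolios and the 5‑percentage‑point drift band are all hypothetical. Real platforms use longer questionnaires, formal suitability checks and their own rebalancing rules.

```python
# Hypothetical model portfolios: target weights per asset class.
MODEL_PORTFOLIOS = {
    "cautious": {"equity": 0.30, "bonds": 0.60, "cash": 0.10},
    "balanced": {"equity": 0.60, "bonds": 0.35, "cash": 0.05},
    "adventurous": {"equity": 0.85, "bonds": 0.15, "cash": 0.00},
}


def risk_band(horizon_years: int, tolerance: int) -> str:
    """Map questionnaire answers to a risk band.

    `tolerance` is a hypothetical 1-5 self-assessed score; a longer
    horizon adds points because there is more time to recover from falls.
    """
    horizon_points = 2 if horizon_years >= 10 else 1 if horizon_years >= 5 else 0
    score = tolerance + horizon_points
    if score <= 3:
        return "cautious"
    if score <= 5:
        return "balanced"
    return "adventurous"


def needs_rebalance(holdings: dict, target: dict, band: float = 0.05) -> bool:
    """True if any asset's current weight has drifted more than `band` from target."""
    total = sum(holdings.values())
    return any(abs(holdings.get(asset, 0.0) / total - weight) > band
               for asset, weight in target.items())


band_name = risk_band(horizon_years=15, tolerance=3)
target = MODEL_PORTFOLIOS[band_name]
holdings = {"equity": 70_000, "bonds": 25_000, "cash": 5_000}  # after an equity rally
print(band_name, needs_rebalance(holdings, target))
```

Threshold‑based rebalancing like this is one common design choice. Some providers instead rebalance on a fixed calendar, or combine the two.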

Newer platforms are adding generative AI layers: plain‑English explanations of why your portfolio looks the way it does, Q&A assistants that pull from research tied to the funds you hold, and clearer visualisations of diversification and downside risk.

Providers such as Morningstar are already embedding their data and ratings into tools like Microsoft Copilot so advisers can generate portfolio analytics and client reports directly inside office software, showing how trusted data plus AI interfaces are becoming part of everyday wealth management workflows.

The key point: the “AI” mostly sits in the recommendation engine, communication layer and operational plumbing. The underlying investments are typically standard building blocks, not secret, high‑octane trades. That distinction also matters from a regulatory angle: the UK FCA has repeatedly stressed that firms remain fully accountable for suitability and Consumer Duty outcomes, even when they deploy AI in advice journeys or portfolio engines, a theme running through its guidance on AI and financial regulation.

Algorithmic trading apps and systematic strategies

Another strand of AI investing is algorithmic trading. Here it is vital to separate:

- Retail “AI trading apps”, often marketed as effortless profit engines

- Institutional systematic strategies, which are rules‑based and tightly risk‑controlled

In practice, institutions use:

- Execution algorithms that slice large orders into smaller pieces to reduce market impact and trading costs

- Short‑term trading rules based on price, volume and news flow

- Machine‑learning models that digest alternative data (such as web traffic or satellite images) on multi‑day or multi‑week horizons
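The simplest execution algorithm slices a large parent order into equal pieces spread over time, known as a time‑weighted or TWAP‑style schedule. The sketch below assumes fixed time slots and ignores prices, liquidity and venue choice, all of which production algorithms adapt to continuously. `slice_order` and `ChildOrder` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChildOrder:
    slot: int       # time slot index, e.g. one per 15 minutes (an assumption)
    quantity: int


def slice_order(total_qty: int, n_slices: int) -> List[ChildOrder]:
    """Split a parent order into near-equal child orders, TWAP-style.

    The remainder is spread one unit at a time over the first slices,
    so the child quantities always add back up to total_qty.
    """
    if n_slices <= 0:
        raise ValueError("n_slices must be positive")
    base, remainder = divmod(total_qty, n_slices)
    return [ChildOrder(slot=i, quantity=base + (1 if i < remainder else 0))
            for i in range(n_slices)]


for child in slice_order(10_003, 4):
    print(child)
```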

High‑frequency trading, where speed is paramount, is only one niche. Much of the industry focuses less on raw speed and more on robust data science, model validation and governance. A recent Business Insider review of leading hedge funds notes that firms like Citadel, Two Sigma and Man Group now treat AI as core research and execution infrastructure, but still keep portfolio managers and risk committees firmly in charge of capital allocation, underscoring that humans, not machines, are the edge.

Regulators echo this: the FCA’s market‑oversight team has been clear that “a machine may not always be able to recognise behaviour an analyst would know is deceptive or wrong”, which is why strong human oversight is non‑negotiable in any AI‑driven trading stack.

The aim is to apply consistent rules to noisy markets, not to gamble on opaque black boxes. That is also why professional bodies are warning about “AI‑washing”: the CFA Institute has highlighted cases where firms badge simple rules‑based systems or chat interfaces as “AI funds” without any meaningful machine learning in the core investment engine, and urges investors to scrutinise how models are actually used in decision‑making.

AI‑powered research and portfolio co‑pilots

A newer wave of tools acts as “co‑pilots” for analysts, advisers and, increasingly, advanced individual investors. These systems:

- Provide chatbot interfaces over research libraries, company filings and earnings calls

- Offer screeners that blend fundamentals with sentiment and sustainability metrics

- Analyse portfolios to highlight factor tilts, concentration risks and “what‑if” scenarios

Crucially, these co‑pilots accelerate reading, screening and scenario analysis rather than placing trades on their own. They help surface questions and patterns that humans might miss or take days to find, but they still sit within governed workflows where people remain accountable.

This mirrors what is happening inside large asset managers, where machine‑learning models are used to pre‑screen ideas and summarise documents while investment committees still make final calls, a pattern documented across multiple case studies in the CFA Institute’s AI in asset management content collection.

As one practitioner puts it, describing Man Group’s AlphaGPT research tool, “AlphaGPT does not replace human judgment but amplifies it” – a concise summary of how serious firms view AI in the investment process.

In summary, AI investing today is best understood as a toolkit across the value chain:

- Automation of routine processes

- Faster, broader research and screening

- Clearer, more tailored explanations and risk views

The promise lies in augmenting human judgement with scalable, auditable systems, not in surrendering control to an inscrutable algorithm.

How AI investing tools work in practice – and what they are good at

Data as the starting point

Every AI investing tool, from a simple robo‑adviser app to a hedge fund’s research engine, starts with data. In practice, that usually means four main streams:

- Market data: live and historical prices, trading volumes, order books and execution details. These feed trading algos, risk models and portfolio analytics.

- Fundamental data: company accounts, analyst estimates, sector metrics and macroeconomic indicators that underpin longer‑term decisions.

- Text data: news articles, earnings transcripts, regulatory filings, broker notes and sometimes social media, used to gauge sentiment and detect new information.

- Alternative data (mainly professional): anonymised card‑spend trends, web traffic, app usage and even satellite images to infer activity before it shows up in official numbers.

The core point is that AI is only as useful as the **quality, coverage and governance** of this data. Well‑designed platforms focus heavily on cleaning, validating and tracing data, and on respecting licences and privacy rules, long before any model is switched on.

As the *Handbook of AI and Big Data Applications in Investments* stresses, much of the edge in practice comes from “data cleaning, joining datasets, and governance” rather than flashy algorithms alone, a view echoed by providers such as Morningstar in their work to make “trusted, independent data and insights” AI‑ready inside tools like Microsoft Copilot.
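As an illustration of the unglamorous cleaning and validation work described above, the following sketch checks a daily price series before any model sees it. The rules (drop missing, non‑positive or duplicate‑date prices; keep but flag day‑on‑day moves above 50% for human review) and the `validate_prices` function are assumptions for illustration, not any provider’s actual pipeline. Logging every issue, rather than silently fixing it, is what keeps the data traceable.

```python
from datetime import date
from typing import List, Optional, Tuple


def validate_prices(rows: List[Tuple[date, Optional[float]]],
                    max_jump: float = 0.5) -> Tuple[List[Tuple[date, float]], List[str]]:
    """Clean a daily price series and log every issue found.

    Rows with missing, non-positive or duplicate-date prices are dropped;
    large day-on-day moves are kept but flagged so a human can check them.
    """
    issues: List[str] = []
    clean: List[Tuple[date, float]] = []
    accepted_dates = set()
    for day, price in sorted(rows, key=lambda row: row[0]):  # stable sort by date
        if day in accepted_dates:
            issues.append(f"{day}: duplicate date dropped")
        elif price is None:
            issues.append(f"{day}: missing price dropped")
        elif price <= 0:
            issues.append(f"{day}: non-positive price {price} dropped")
        else:
            if clean:
                move = price / clean[-1][1] - 1
                if abs(move) > max_jump:
                    issues.append(f"{day}: {move:+.0%} move kept but flagged for review")
            clean.append((day, price))
            accepted_dates.add(day)
    return clean, issues


raw = [
    (date(2024, 1, 3), 101.0),
    (date(2024, 1, 2), 100.0),
    (date(2024, 1, 2), 100.5),   # duplicate date
    (date(2024, 1, 4), None),    # missing value
    (date(2024, 1, 5), -1.0),    # impossible price
    (date(2024, 1, 8), 250.0),   # suspicious jump
]
clean, issues = validate_prices(raw)
print("\n".join(issues))
```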


Modular models, not one all‑knowing brain

AI investing is not run by a single super‑intelligent system. Instead, firms combine different models, each doing a specific job:

- Classification models tag information – for example, sorting news as positive, negative or neutral for a given company, or flagging which themes an earnings call covers.

- Forecasting models estimate the odds of different outcomes, such as the probability a company will cut its dividend, or how likely a portfolio is to breach a risk limit.

- Portfolio optimisation models help balance risk and return under real‑world constraints like position limits, liquidity, tax rules or client mandates.

- Natural language tools summarise research, explain portfolio changes, or answer “why did my portfolio move today?” in plain language.
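Portfolio optimisation can be illustrated with a deliberately brute‑force example: try every long‑only weight combination on a 5% grid, discard any that break a position limit, and keep the one with the best mean‑variance trade‑off. The expected returns, covariances, risk‑aversion setting and 60% cap are hypothetical inputs. Real optimisers use quadratic programming and many more constraints.

```python
from itertools import product


def optimise(mu, cov, risk_aversion=3.0, max_weight=0.6, step=0.05):
    """Brute-force mean-variance optimiser on a weight grid.

    Maximises  w.mu - (risk_aversion / 2) * w'Cw  over long-only weights
    that sum to 1, where no single weight may exceed max_weight.
    """
    n = len(mu)
    steps = round(1 / step)
    best_w, best_u = None, float("-inf")
    for units in product(range(steps + 1), repeat=n - 1):
        last = steps - sum(units)
        if last < 0:
            continue  # weights would sum to more than 1
        w = [u / steps for u in (*units, last)]
        if max(w) > max_weight + 1e-9:
            continue  # breaks the position limit
        ret = sum(wi * mi for wi, mi in zip(w, mu))
        var = sum(w[i] * w[j] * cov[i][j] for i in range(n) for j in range(n))
        utility = ret - risk_aversion / 2 * var
        if utility > best_u:
            best_w, best_u = w, utility
    return best_w, best_u


# Hypothetical inputs for equities, bonds and property.
mu = [0.07, 0.03, 0.05]
cov = [[0.040, 0.002, 0.010],
       [0.002, 0.005, 0.001],
       [0.010, 0.001, 0.020]]
weights, utility = optimise(mu, cov)
print([round(w, 2) for w in weights], round(utility, 4))
```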

In professional settings this modular approach is now standard. Large hedge funds and systematic managers described in Business Insider’s coverage of firms such as Citadel, Two Sigma and Man Group use separate engines for signal extraction, risk and execution, and then layer research co‑pilots like Man Numeric’s “AlphaGPT” on top. As one Man Group team puts it, “AlphaGPT does not replace human judgment but amplifies it.”

These models are increasingly organised into “agentic” workflows. Rather than one model guessing everything, a system:

- Breaks a task into steps (e.g., gather data, screen ideas, run scenarios, draft a note).

- Routes each step to the most suitable tool.

- Applies pre‑defined limits around what the system can do without human approval.

This kind of orchestration, described in detail in the CFA Institute’s work on agentic AI for finance, makes behaviour more predictable and auditable, which matters for both clients and regulators.
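Here is a minimal sketch of the agentic pattern above, assuming a hypothetical `Orchestrator` class and stand‑in tools. Each plan step is routed to a named tool, which proposes an action, and any action that would move more than a pre‑set amount of money is held back unless a human approval hook says yes. Real agent frameworks differ considerably; this only shows the shape of the control.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Action:
    kind: str              # what the tool proposes, e.g. "draft_note" or "place_trade"
    notional: float = 0.0  # money the action would move; 0 for research-only steps


@dataclass
class Orchestrator:
    tools: Dict[str, Callable[[dict], Action]]  # step name -> tool that handles it
    auto_limit: float                           # max notional allowed without sign-off
    approve: Callable[[Action], bool]           # human approval hook
    log: List[str] = field(default_factory=list)

    def run(self, plan: List[str], ctx: dict) -> None:
        for step in plan:
            action = self.tools[step](ctx)  # route the step to its tool
            if action.notional > self.auto_limit and not self.approve(action):
                # Pre-defined limit: large money-moving actions need a human yes.
                self.log.append(f"{step}: blocked pending human approval")
                continue
            self.log.append(f"{step}: cleared ({action.kind})")


# Hypothetical stand-in tools; real ones would call data feeds, screens or brokers.
tools = {
    "gather": lambda ctx: Action("gather_data"),
    "screen": lambda ctx: Action("screen_ideas"),
    "trade": lambda ctx: Action("place_trade", notional=ctx["order_size"]),
    "draft": lambda ctx: Action("draft_note"),
}
plan = ["gather", "screen", "trade", "draft"]

orchestrator = Orchestrator(tools, auto_limit=10_000, approve=lambda action: False)
orchestrator.run(plan, {"order_size": 250_000})
print("\n".join(orchestrator.log))
```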

Human‑in‑the‑loop by design

In responsible set‑ups, humans still make the key decisions. AI’s role is to extend their reach, not replace their judgement.

- Advisers and portfolio managers set objectives, constraints and approval thresholds. They choose which models to trust and where to apply them.

- AI systems do the heavy lifting: scanning markets, crunching data, creating scenarios and drafting explanations.

- Humans review and sign off, using experience to challenge odd results, adjust assumptions and approve trades or recommendations.

How this looks in practice:

- For individuals: a robo‑adviser suggests a diversified portfolio based on your time horizon and risk tolerance. The system monitors markets and proposes rebalancing or tax‑loss harvesting; you approve changes that are explained in everyday language.

- For institutions: an analyst asks an AI research tool to review hundreds of earnings calls and sustainability reports. The system surfaces a shortlist of unusual statements or risk flags; the analyst then spends time on the ten or so that genuinely matter.

Surveys of practitioners by the CFA Institute and regulators such as the FCA consistently find this augmentation model is how AI is actually used in front‑line investment teams and robo‑style platforms, with humans retaining accountability under regimes like the UK Senior Managers and Certification Regime. That human‑in‑the‑loop structure helps avoid blind reliance on a “black box” while still gaining scale and speed.
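The institutional triage example can be sketched with simple keyword rules standing in for a language model. This is a plainly simpler technique than the AI research tools described above, and the phrase list and `shortlist` function are hypothetical. It does show the workflow, though: the system ranks documents by risk flags, and a human reads only the top of the list.

```python
from typing import Dict, List, Tuple

# Hypothetical watch-list of phrases an analyst might want surfaced.
RISK_PHRASES = ["going concern", "material weakness", "covenant",
                "restatement", "impairment"]


def shortlist(docs: Dict[str, str], top_n: int = 10) -> List[Tuple[str, int, List[str]]]:
    """Rank documents by how many distinct risk phrases they contain.

    Returns (doc_id, hit_count, matched_phrases) for up to top_n documents
    with at least one hit, highest count first.
    """
    scored = []
    for doc_id, text in docs.items():
        lowered = text.lower()
        hits = [phrase for phrase in RISK_PHRASES if phrase in lowered]
        if hits:
            scored.append((doc_id, len(hits), hits))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


transcripts = {
    "A": "Auditors raised going concern doubts and a material weakness in controls.",
    "B": "Strong quarter; margins improved across all regions.",
    "C": "We recorded a goodwill impairment in the retail division.",
}
for doc_id, count, phrases in shortlist(transcripts, top_n=2):
    print(doc_id, count, phrases)
```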

The real advantages when things are done well

When built on solid data and overseen properly, AI investing tools excel in a few areas:

Speed and coverage

Systems can read thousands of pages of filings or news in minutes, flagging only the most relevant passages.

Systematic screens can monitor global universes and niche segments that would be impossible for a small team to track manually. Leading hedge funds now routinely point their AI research stacks at petabytes of alternative data, using them to scan more than 50,000 instruments at once in some cases.

Pattern discovery and personalisation

Machine‑learning models can spot complex, non‑linear links between fundamentals, sentiment and price behaviour. They are far from crystal balls, but independent research from organisations like the CFA Institute indicates they can outperform simple linear models at cross‑sectional return prediction when carefully validated.

Co‑pilots can tailor explanations, portfolio breakdowns and “what‑if” views to a specific household or institutional mandate, improving understanding and discipline. Finextra’s work on digital and generative AI in investing shows how banks are already using generative models to turn traditional risk analytics into client‑specific visuals and narratives at scale.

Risk, compliance and operations

Surveillance tools can scan transactions and communications for potential misconduct, and triage KYC or AML alerts more efficiently. In banking, these AI‑driven controls are increasingly seen as part of a “compliance‑first” architecture, with auditable logs and clear decision trails.

Portfolio platforms can surface early‑warning risk indicators, map factor exposures and stress‑test portfolios across scenarios, in line with the kinds of model‑governance frameworks discussed in the CFA Institute’s *AI in Asset Management* collection.

Automation reduces manual errors and lowers operational costs, helping smaller firms and sophisticated individuals access workflows once limited to large institutions.

Industry reviews, from *When AI Actually Works in Finance* on Finextra to the FCA’s own AI speeches, all underline the same point: in investing, AI is most reliable as a process and risk‑management upgrade, not as an autonomous, market‑beating brain.

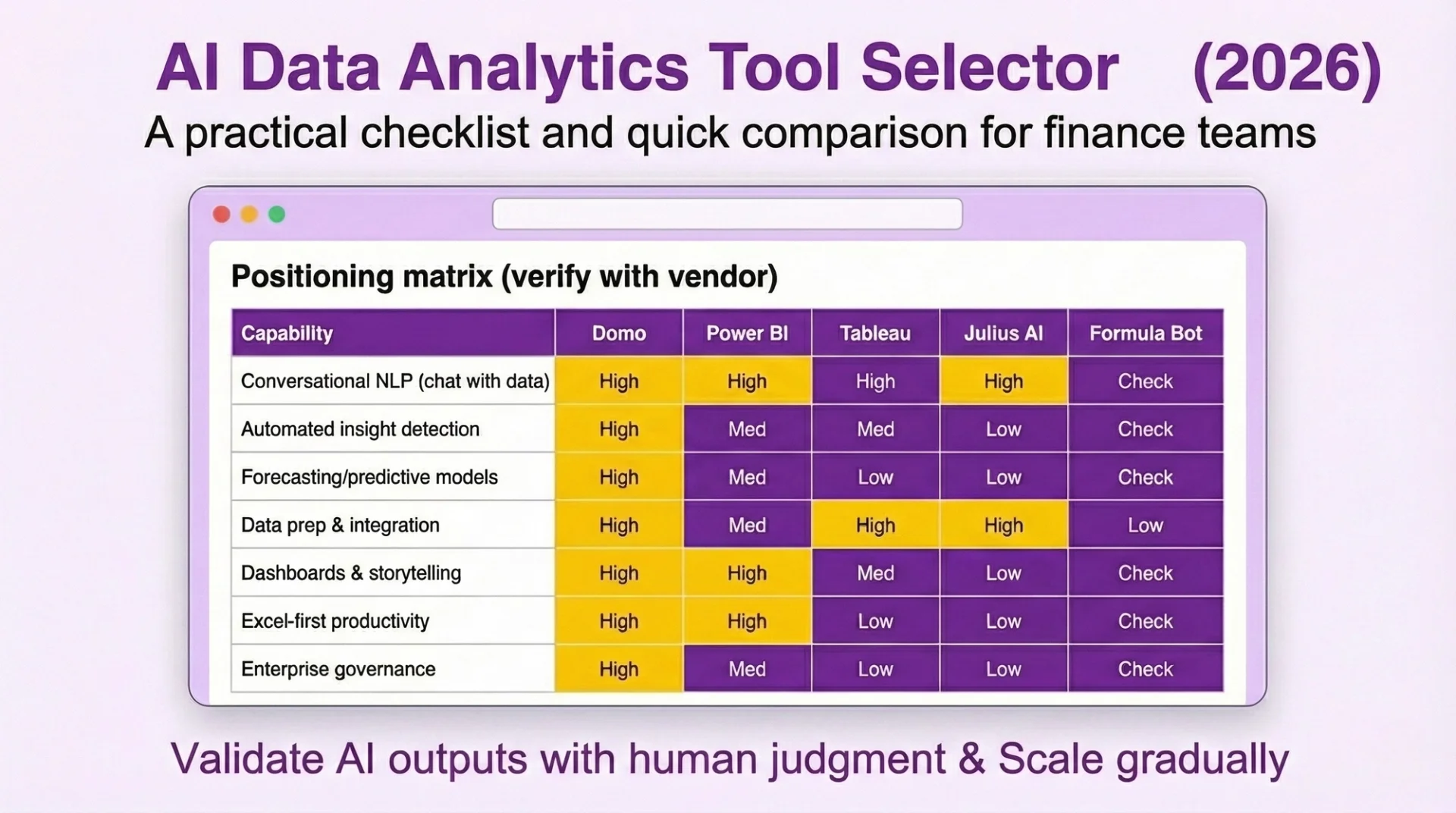

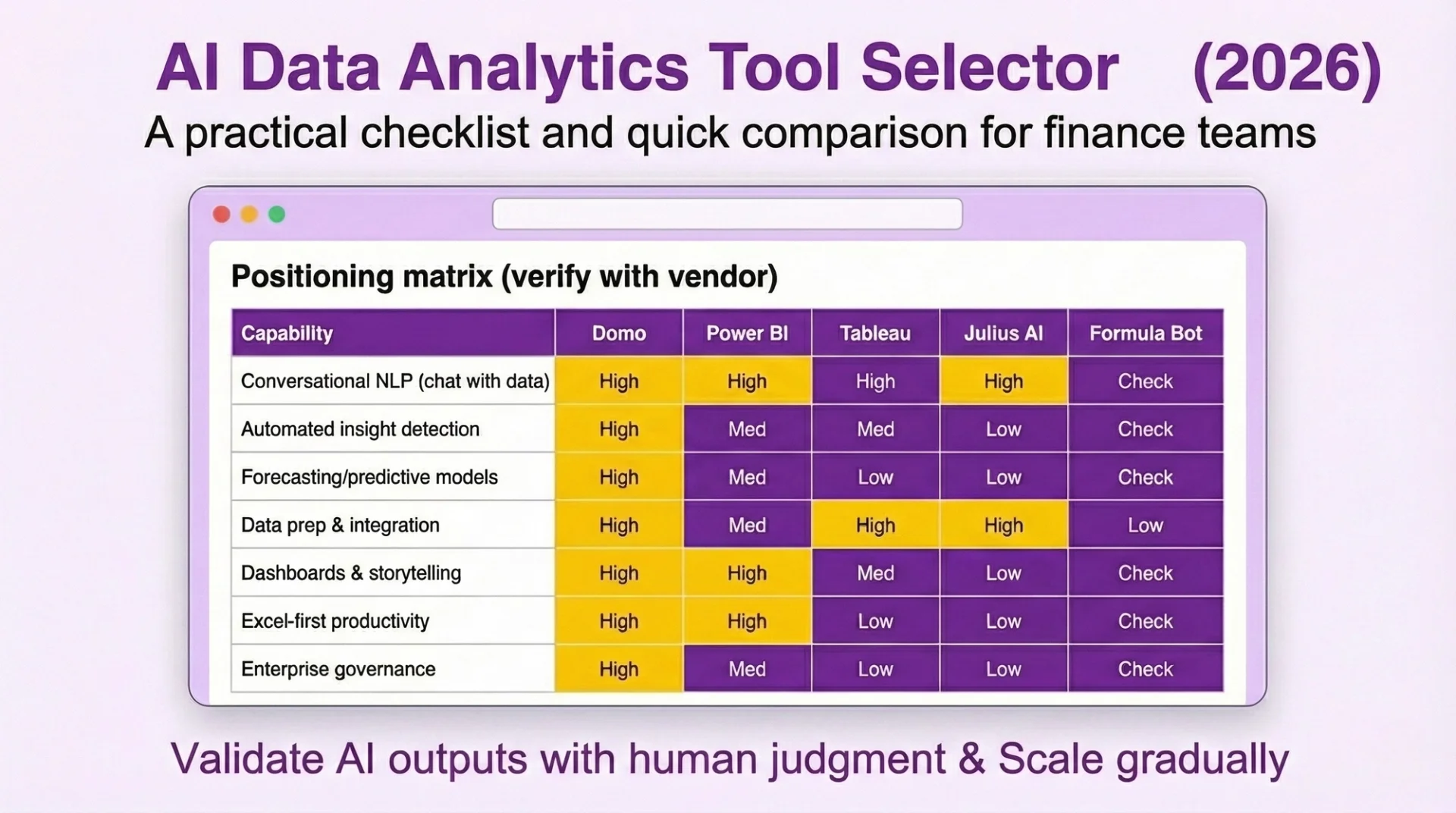

Risks, limits and how to spot trustworthy AI investing providers

Hype, “AI washing” and unrealistic expectations

AI investing sits in a classic hype cycle. Central banks and regulators have warned that optimism around AI could spill into bubbles, much like the late‑1990s tech boom. The Bank of England’s Financial Policy Committee, for example, has highlighted that valuations for some AI‑focused technology stocks “appear stretched”, leaving equity markets exposed if expectations about AI are scaled back. Equity prices in “AI plays” can move more on narrative than on cash flows, and those narratives can reverse quickly.

For investors, the key distinction is between:

- Long‑term AI themes – diversified exposure to companies genuinely reshaping productivity or building critical infrastructure.

- Speculative spikes – chasing thinly researched “AI winners” because they appear in every headline.

Alongside this sits AI washing: rebranding basic rules‑based tools as “advanced AI”. A robo‑adviser might simply follow a static model portfolio, or an app might trigger trades on simple indicators, yet the marketing language implies cutting‑edge machine learning. The CFA Institute describes AI washing as firms overstating or misrepresenting how much AI really drives their investment process, often to appear more innovative than they are.

Why this matters:

- You may pay higher fees for little additional capability.

- You may trust the tool too much, assuming sophistication and risk controls that are not really there.

Treat “AI‑powered” as a prompt for questions, not a reason to suspend scepticism. As the FCA has put it, “machines do not have agency, humans do” – the responsibility for how AI is used still sits with the firm, not the buzzwords on the website.

Technical and market limits

Markets are difficult terrain for AI. Financial data are noisy, incomplete and constantly changing. A model that looks brilliant in a backtest may simply have memorised quirks in the past rather than learned robust patterns. Research shows that while machine‑learning‑based return models can outperform simple linear approaches, the gains are typically incremental and highly sensitive to data quality and regime shifts.

Generative AI adds a different set of limits. Systems that summarise research or answer questions can sound authoritative while being confidently wrong. If an investing app leans on such models without strong retrieval from trusted data and human checks, it can push users towards poor decisions.

Industry experience suggests that the most reliable gains from AI so far have come from workflow and risk improvements, not from autonomous stock‑picking, and that human‑AI combinations still outperform AI alone.

There is also a human dimension:

- Over‑reliance on AI “co‑pilots” can erode basic analytical skills and market intuition.

- Polished dashboards may create a veneer of rigour – sophisticated charts and explanations that mask shallow analysis or fragile models.

For both individuals and institutions, AI should enhance judgement, not replace it.

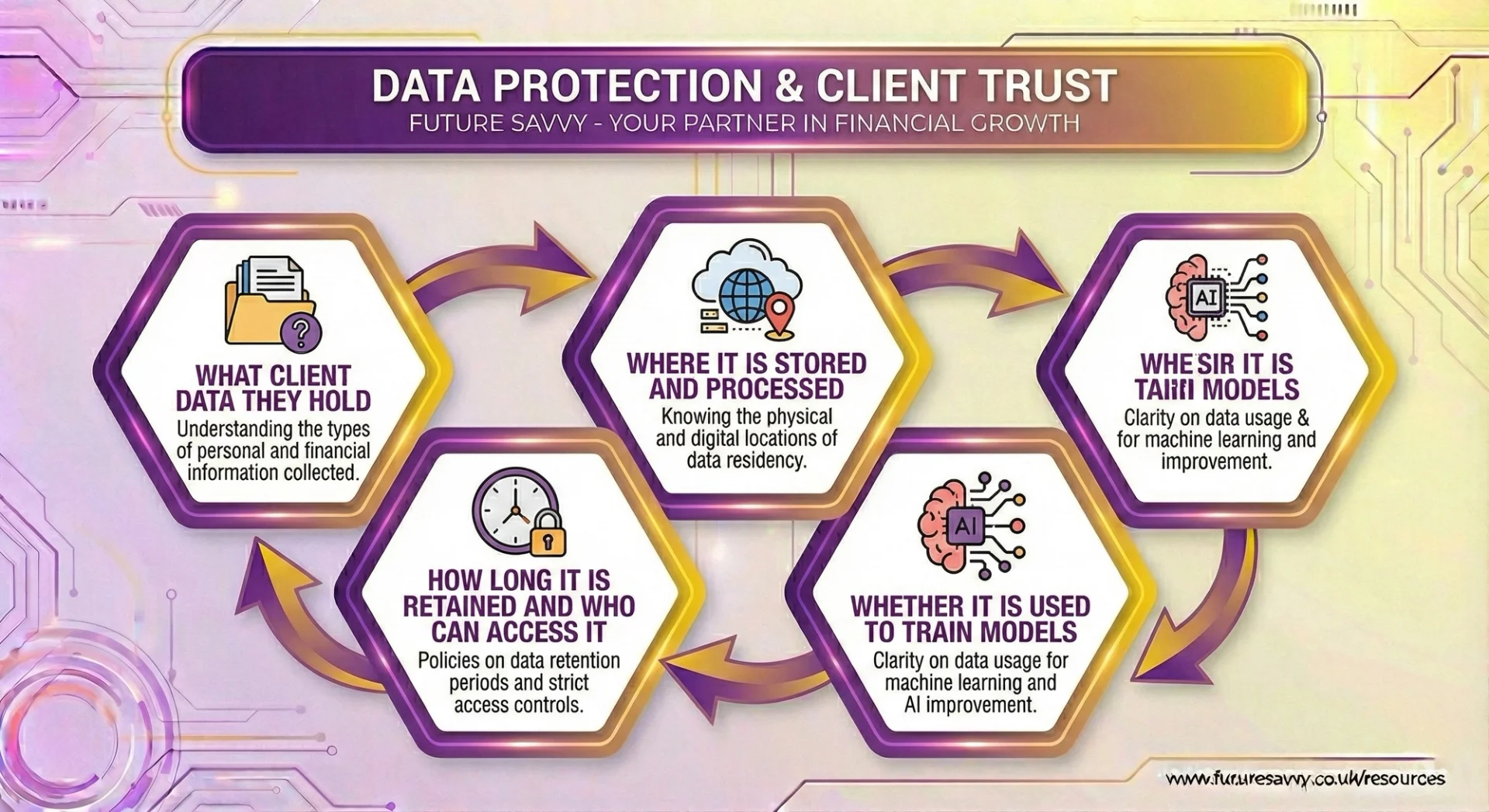

Regulatory, legal and privacy considerations

Regulation has not been suspended for AI. When a bank, robo‑adviser or trading platform uses AI, the usual duties still apply: ensuring products are suitable, treating customers fairly, communicating clearly, and monitoring for abuse or manipulation.

UK regulators have been clear that existing frameworks such as the Senior Managers and Certification Regime (SM&CR) and the Consumer Duty already apply to AI: firms can develop AI services without waiting for new AI‑specific rules, but they remain fully accountable for outcomes.

Under the SM&CR in particular, named individuals remain personally accountable for outcomes, even if an algorithm made the initial recommendation.

Data protection is equally central.

If a provider cannot clearly explain what data it collects and how that data is protected, or the answers are buried in small print, treat it as a warning sign. Consumer warnings around unauthorised “AI investing” brands like Microsoft AI Invest underline that regulatory status and data handling are non‑negotiable basics, not nice‑to‑haves.

Practical checklist: how to identify trustworthy AI investing tools

Turning those risks into a short due‑diligence list:

Authorisation and reputation

In the UK, confirm the firm is authorised on the FCA register and not on the Warning List. Favour providers with a visible supervisory history, not freshly created shells.

Transparency about how AI is used

Ask which models are involved, what data they rely on, and how performance is validated.

Look for evidence of out‑of‑sample testing and live, benchmarked track records, not just carefully chosen backtests. Serious asset‑management shops typically emphasise robust backtesting, walk‑forward validation and ongoing monitoring rather than one‑off “perfect” simulations.
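Walk‑forward validation, mentioned above, can be shown in a few lines. This sketch assumes a rolling window of fixed length: the model is fitted only on data that comes before each test window, and then everything rolls forward, so no test observation is ever used for fitting. `walk_forward_splits` is a hypothetical helper, not a specific library’s API.

```python
from typing import Iterator, Tuple


def walk_forward_splits(n_obs: int, train_size: int,
                        test_size: int) -> Iterator[Tuple[range, range]]:
    """Yield (train, test) index ranges for a rolling walk-forward test.

    Each training window ends immediately before its test window, and the
    windows roll forward by test_size, so test data never leaks into fitting.
    """
    if train_size <= 0 or test_size <= 0:
        raise ValueError("window sizes must be positive")
    start = 0
    while start + train_size + test_size <= n_obs:
        split = start + train_size
        yield range(start, split), range(split, split + test_size)
        start += test_size


# Ten periods of data, four-period training windows, two-period test windows.
for train, test in walk_forward_splits(10, train_size=4, test_size=2):
    print(f"fit on {list(train)}, evaluate on {list(test)}")
```

An expanding window, where the training set keeps growing instead of rolling, is an equally common variant. Either way, the out‑of‑sample results from each test window are what should be reported.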

Explainability and auditability

The tool should show, in plain English, why a recommendation or trade was suggested: key drivers, assumptions and sensitivities. Institutional users should expect audit trails, model documentation and ongoing monitoring. Many banks now frame explainability, compliance and control as the three pillars of acceptable AI in financial services, precisely because opaque models are hard to defend to regulators and clients.
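For a linear scoring model, “key drivers” have an exact answer: each input’s contribution is its weight times how far it sits from a baseline, and the contributions add up to the change in score. The weights, driver names and standardised input values below are hypothetical. Non‑linear models need approximate attribution methods, such as SHAP values, to produce a comparable breakdown.

```python
from typing import Dict, List, Tuple

# Hypothetical linear scoring model: weight per driver.
WEIGHTS = {"valuation": 0.5, "momentum": 0.3, "quality": 0.2}


def score(features: Dict[str, float]) -> float:
    """Linear score: weighted sum of the drivers."""
    return sum(w * features[name] for name, w in WEIGHTS.items())


def explain(features: Dict[str, float],
            baseline: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-driver contributions to score(features) - score(baseline), largest first.

    contribution = weight * (feature - baseline); for a linear model these
    add up to the total change in score, so no part of the result is unexplained.
    """
    contributions = [(name, w * (features[name] - baseline[name]))
                     for name, w in WEIGHTS.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


stock = {"valuation": 1.2, "momentum": -0.5, "quality": 0.4}
average = {"valuation": 0.0, "momentum": 0.0, "quality": 0.0}
for name, contribution in explain(stock, average):
    print(f"{name:>10}: {contribution:+.2f}")
```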

Controls and human oversight

Check for human‑in‑the‑loop on actions that move money or materially change client outcomes. Providers should have clear kill‑switches, bias testing, and incident‑response plans.

Marketing discipline and outcomes focus

Red flags: vague “AI‑powered” claims, guaranteed returns, or pressure to sign up quickly. Positive signs: claims tied to concrete, verifiable outcomes such as lower fees, better risk monitoring, or time saved for clients and advisers.

In summary, trustworthy AI investing tools usually share five traits:

- Clear regulatory status and accountable people

- Specific, comprehensible descriptions of how AI is used

- Evidence of robust validation and live performance

- Strong controls, data‑protection measures and human oversight

- Marketing that focuses on realistic, measurable benefits rather than magic‑sounding “alpha”

Keeping this lens in mind helps both individuals and institutions benefit from AI‑enabled investing without handing the wheel to a black box.

Using AI investing tools wisely: individuals vs institutions

For individual investors and advisers

Treat AI as a co‑pilot, not an oracle. Robo‑advisers and AI portfolio apps are strongest at turning your stated goals and risk tolerance into diversified, low‑cost portfolios and keeping them on track through automatic rebalancing and tax‑loss harvesting – very much in line with how mainstream providers and regulators now expect technology to be used in wealth management.

The FCA is clear that “machines do not have agency, humans do”, and that firms – and ultimately you – remain responsible for the outcomes, not the algorithm. Use these tools to do the heavy lifting, while you stay in charge of the big decisions.

Well‑designed tools can also help you *see* risk more clearly. Scenario testers that show what happens to your portfolio if markets fall or interest rates jump can make it easier to stick to a long‑term plan instead of reacting to headlines.

This mirrors how institutional managers now use AI for stress‑testing and “early warning” risk analytics rather than crystal‑ball prediction, as described by the CFA Institute’s work on machine learning in the investment process. Advisers can use AI assistants to explain these scenarios in plain language to clients.
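A scenario tester of the kind described above can be as simple as applying a set of assumed price shocks to each holding. The two scenarios and their shock sizes below are hypothetical, chosen only for illustration. Real tools calibrate scenarios to historical episodes or economic models and account for how assets move together.

```python
from typing import Dict

# Hypothetical scenarios: assumed fractional change in value per asset class.
SCENARIOS = {
    "equities fall 30%": {"equity": -0.30, "bonds": 0.02, "cash": 0.0},
    "rates rise 2 points": {"equity": -0.10, "bonds": -0.12, "cash": 0.0},
}


def stress_test(holdings: Dict[str, float]) -> Dict[str, float]:
    """Return the change in portfolio value (same currency as holdings) per scenario."""
    return {
        name: sum(value * shocks.get(asset, 0.0) for asset, value in holdings.items())
        for name, shocks in SCENARIOS.items()
    }


portfolio = {"equity": 60_000, "bonds": 35_000, "cash": 5_000}
total = sum(portfolio.values())
for name, change in stress_test(portfolio).items():
    print(f"{name}: {change:+,.0f} ({change / total:+.1%})")
```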

Keep the basics front and centre. AI does not remove market risk or guarantee a fixed monthly income, and it cannot reliably time every market move. The principles still apply:

- Diversify across assets and regions

- Match investments to your time horizon

- Keep costs and unnecessary trading low

For institutional investors and professional teams

For institutions, the question is not “How do we add AI?” but “Where does AI remove bottlenecks and create measurable value?”. Prioritise workflows where scale and coverage matter: digesting documents and research, enhancing surveillance and compliance, and strengthening risk analytics and portfolio construction support. Set explicit metrics – for example, analyst hours saved, securities covered, or false positives reduced – and only scale projects that demonstrably move those numbers.

Governance must be built in from day one. Bring compliance, risk and technology into design discussions early, rather than signing off at the end. Partner for generic capabilities such as document intelligence or coding co‑pilots, but invest internally where you have genuine edge in data or domain expertise.

The FCA’s evolving expectations on explainability, bias and model governance for AI in asset management, summarised in its work on AI in financial regulation, are a good proxy for what institutional clients and boards will increasingly demand from investment teams as well.

Culture and skills are as important as models. Upskill teams in AI literacy, data quality, and model‑risk management so they can question outputs intelligently. Deliberately maintain “AI‑free” analytical practice – for example, manual case studies or investment committees that review decisions without system prompts – to preserve judgement and spot when tools are drifting or overfitting.

Where the trendline points

Over the next few years, expect more agent‑like systems that automate chains of tasks: gathering data, drafting research, proposing trades, even preparing client notes. In high‑stakes areas, though, these will sit inside predefined, auditable workflows with human sign‑off at key decision points, rather than acting as fully autonomous traders or portfolio managers. Regulators and industry bodies are converging on this pattern: AI is welcome, but it must be explainable, well‑governed and supervised.

The durable edge will come from combining:

- Trusted, well‑governed data

- Interpretable, controllable models

- Skilled people operating within robust governance

Firms and individuals who use AI to make their process more transparent, rather than more opaque, are likely to earn greater trust – and to be better placed when regulators, clients and markets ask to see how the results were actually produced. As one practitioner quoted by the CFA Institute puts it, “AI should sharpen our investment process, not obscure it.”

AI investing is not a single magic product, but a family of tools that sit across the investment process. Used well, robo‑advisers, algorithmic strategies and AI‑enabled portfolio co‑pilots can extend human capability rather than replace it.

Across these tools, the pattern is consistent:

- Benefits: speed, scale, wider coverage, more tailored portfolios, and stronger risk and compliance checks. Professional investors are already using machine learning to enhance forecasting and risk management, with evidence that well‑designed models can incrementally improve risk‑adjusted returns over traditional factor approaches.

- Risks: hype and AI washing, opaque models, patchy data, over‑reliance on automation, and regulatory or privacy missteps.

The real edge in AI investing lies in keeping humans firmly in charge: setting objectives, challenging outputs, and insisting on transparency and measurable results. In practice, that is how leading hedge funds use AI: as a powerful research and execution layer that still sits under human portfolio management and risk committees, not as a fully autonomous money‑making machine.

Individuals should always sanity‑check any “AI” services they use against a clear checklist and, if starting out, favour regulated robo‑advice or established platforms. As AI matures, explainable and auditable systems will define trusted investing: less a novelty than part of the baseline investors quietly expect.

Future Savvy can help you and your team make the most of AI and grow your business. Contact us today to learn more.

