Understanding and Managing Context Windows: How to Keep Your Copilot Conversations Focused?

If you have spent any significant time using Microsoft Copilot, you have likely encountered this frustrating scenario: you are deep into a productive chat, refining a document or analysing data. You ask a follow-up question, and Copilot responds with a generic answer, seemingly having forgotten everything you discussed five prompts earlier.

This "conversation drift" or "AI amnesia" is not a bug. It is a fundamental architectural constraint of all Large Language Models (LLMs) known as the context window. In this article, we’ll explain what context windows are, how they work behind the scenes, the challenges they pose (from token limits to privacy), and best practices to help you maximise Copilot’s effectiveness.

For more expert tips on how to boost your Copilot efficiency, make sure to check out our instructor-led training courses!

What Are Copilot Context Windows?

Defining Tokens: The Building Blocks of AI Memory

A context window refers to the amount of text (measured in tokens) that an AI model can consider at one time. Tokens are pieces of words; for example, “networking” might be two tokens (“network” + “ing”). As a rule of thumb, 1 token is roughly 3/4 of a word in English. So if a model has an 8,000-token context window, that’s about 6,000 words of text it can handle in its “working memory” before it starts forgetting old content.
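The rule of thumb above can be turned into a quick back-of-the-envelope estimate. The sketch below assumes the 3/4-word-per-token ratio from the text. Real tokenizers vary by model and language, and the function names are hypothetical, chosen only for illustration.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb from the text: 1 token is roughly 3/4 of an English word


def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens a piece of English text uses."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


def words_that_fit(context_window_tokens: int) -> int:
    """Roughly estimate how many English words fit in a context window."""
    return int(context_window_tokens * WORDS_PER_TOKEN)


print(words_that_fit(8_000))  # 6000
print(estimate_tokens("Summarise the attached quarterly sales report"))  # 6 words -> 8
```

An estimate like this is only useful for judging order of magnitude, for example whether a pasted report is likely to crowd out the rest of the conversation.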

In an enterprise setting, managing this limitation is the single most critical skill for productive AI adoption. It is the difference between a tool that accelerates work and one that creates constant friction. Mastering this goes beyond simple "prompt engineering". It is the vital discipline of context management.

Context Window vs. Grounding

This is the most important concept for M365 Copilot users to understand. Your organisation's 10 TB of data in SharePoint is not your context window.

Context Window: This is the finite "working memory" of the LLM, measured in tokens. It is what the model can "see" at the exact moment it generates a response.

Grounding: This is the process of retrieving information from your organisation's persistent knowledge.

Microsoft 365 Copilot is a Retrieval Augmented Generation (RAG) system. It does not put your entire file library into the model's brain. Instead, it performs a multi-step process:

- Search: It interprets your prompt and uses the Semantic Index for Copilot to search the Microsoft Graph (your emails, files, chats, calendar).

- Retrieve: It finds the most relevant snippets of information.

- Augment: It injects only those retrieved snippets into the context window, alongside your prompt.

This resolves a common puzzle about context windows: how can Copilot "read" a 300-page file if its working memory is limited? It can index the whole file, but it injects only the most relevant passages (e.g., headings, intro, conclusion) into the finite token budget when it generates a summary.
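The retrieve-and-augment idea can be illustrated with a toy example. This is a minimal sketch, not how Copilot ranks content: plain keyword overlap stands in for the Semantic Index, and every name here (`score`, `retrieve`, `augment`, the sample passages) is hypothetical.

```python
def score(query: str, passage: str) -> int:
    """Count query words that also appear in the passage (toy relevance score)."""
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words)


def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages with the highest toy relevance score."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return ranked[:top_k]


def augment(query: str, snippets: list[str]) -> str:
    """Build the combined prompt: retrieved snippets first, then the question."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {query}"


passages = [
    "The travel policy caps hotel spend at 150 pounds per night.",
    "Kitchen cleaning happens every Friday.",
    "Travel bookings must be approved by a line manager.",
]
query = "what is the travel policy for hotel spend"
prompt = augment(query, retrieve(query, passages))
print(prompt)
```

Only the two travel-related passages reach the prompt. The kitchen passage is still in the "library", but it never enters the model's working memory.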

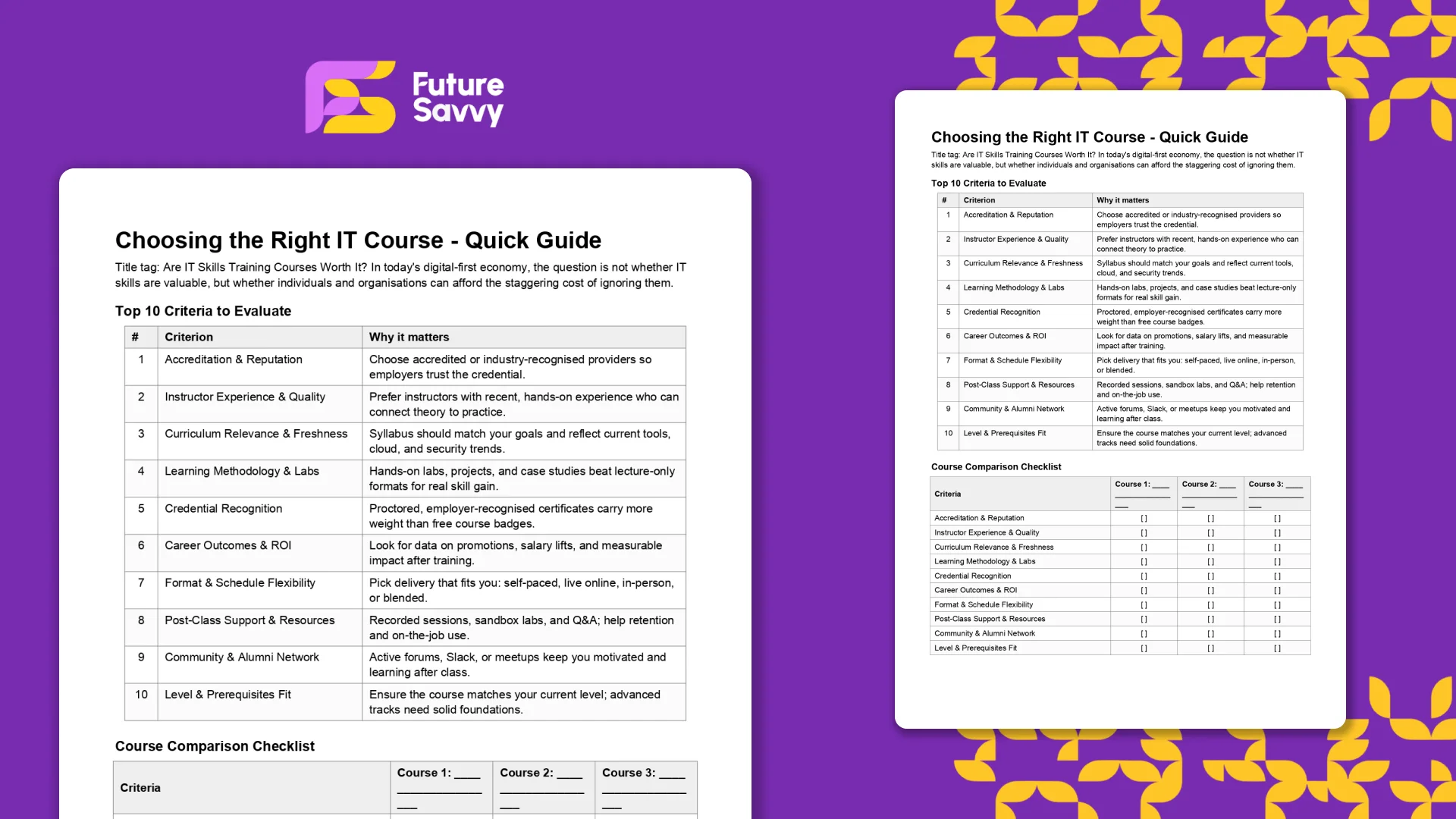

The Three Types of Copilot Context

To be effective, you must be aware of the three types of context Copilot is balancing:

- Session Memory (Chat History): This is the "ephemeral" log of your current conversation, which allows for natural follow-up questions. It is also the first thing to be truncated or "forgotten" when the window fills.

- Grounded Retrieval (Microsoft Graph): This is the persistent, permission-trimmed data from your tenant (OneDrive, SharePoint, Teams, Outlook) and the web. This is the core of M365 Copilot's power.

- Plug-in/Tool Context (Extensibility): This is real-time data pulled from external systems via Copilot Studio connectors. This allows Copilot to ground answers in data from sources like Salesforce, ServiceNow, or internal SQL databases.
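To see why session memory is the first thing to go, consider a simple "keep the newest messages that fit" policy. The sketch below is an assumption made for illustration. The article does not document Copilot's actual truncation logic, and `trim_history` and `estimate_tokens` are hypothetical helpers.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: 1 token is about 3/4 of an English word."""
    return round(len(text.split()) / 0.75)


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined estimated size fits max_tokens."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Draft a project plan for the office move",
    "Add a budget section",
    "Shorten the introduction",
    "Now summarise the plan",
]
print(trim_history(history, max_tokens=15))
# ['Add a budget section', 'Shorten the introduction', 'Now summarise the plan']
```

Under this policy the opening request, which set the whole task, is the first message to disappear. That matches the "forgotten five prompts ago" experience described earlier.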

How Do Copilot Context Windows Work?

Understanding the mechanics of this process is key to controlling it. Every prompt initiates a complex, invisible sequence of events that allocates your finite token budget.

The Token Budget: How Copilot Allocates Memory

Think of the model's total context window as a "token budget". This total budget is fixed and must be divided among multiple components. The available space for your data is what is left over.

A conceptual formula for this trade-off is:

Available_Tokens_for_User_Data =
    Max_Model_Context_Window
    - (System_Instructions + Safety_Guardrails + Tool_Definitions)
    - Chat_History
    - User_Prompt
    - Reserved_Response_Budget

This model explains why things fail. If your Chat_History is very long and your User_Prompt is verbose, the Available_Tokens_for_User_Data (the space for retrieved documents) becomes tiny. This either forces the RAG system to be hyper-selective - leading to a weak, ungrounded answer - or it shrinks the Reserved_Response_Budget, resulting in a truncated, cut-off response.
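The trade-off can be made concrete with a small calculation. The numbers below are purely illustrative and are not real Copilot figures. The `TokenBudget` class and its field names are hypothetical, mirroring the terms of the conceptual formula.

```python
from dataclasses import dataclass


@dataclass
class TokenBudget:
    max_context_window: int
    system_overhead: int      # system instructions + safety guardrails + tool definitions
    chat_history: int
    user_prompt: int
    reserved_response: int

    def available_for_user_data(self) -> int:
        """Tokens left for retrieved documents; never below zero."""
        used = (self.system_overhead + self.chat_history
                + self.user_prompt + self.reserved_response)
        return max(0, self.max_context_window - used)


# Illustrative numbers only, not real Copilot figures.
short_chat = TokenBudget(8_000, 1_500, 500, 100, 1_000)
long_chat = TokenBudget(8_000, 1_500, 4_500, 600, 1_000)

print(short_chat.available_for_user_data())  # 4900
print(long_chat.available_for_user_data())   # 400
```

With the same model and the same overhead, a long chat plus a verbose prompt leaves less than a tenth of the space for grounded data in this example.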

Grounding Mechanics: The Microsoft Graph and Semantic Index

When you interact with Copilot in M365, several processes happen behind the scenes, in quick succession:

- Prompt: A user enters a prompt in an M365 app like Teams or Word.

- Pre-processing (Grounding): Copilot preprocesses the prompt and queries the Microsoft Graph.

- Retrieval: The Semantic Index for Copilot finds the most relevant, permission-trimmed data. This index understands conceptual relationships (e.g., a search for "praise" can find documents using "elated" or "amazed"), not just keywords.

- Augmentation: Copilot augments the original prompt by injecting these retrieved snippets of data into the context window.

- LLM Call: This new, combined prompt (User_Prompt + Retrieved_Data) is sent securely to the LLM.

- Post-processing: Copilot receives the LLM's response, checks it for safety and compliance, and presents it to the user with citations pointing back to the source documents.
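Two details of this flow are easy to miss: retrieval is permission-trimmed before anything reaches the model, and citations are carried alongside the retrieved text. The sketch below illustrates both under simplifying assumptions. The `Document` class, the `allowed_users` field, and both functions are hypothetical and do not represent the Microsoft Graph API.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str
    allowed_users: set[str]


def permission_trim(docs: list[Document], user: str) -> list[Document]:
    """Drop every document the user is not allowed to open."""
    return [d for d in docs if user in d.allowed_users]


def build_prompt(user_prompt: str, docs: list[Document]) -> tuple[str, list[str]]:
    """Combine retrieved text with the user's prompt and return the titles to cite."""
    retrieved = "\n".join(f"[{i}] {d.text}" for i, d in enumerate(docs, start=1))
    citations = [d.title for d in docs]
    return f"{retrieved}\n\n{user_prompt}", citations


docs = [
    Document("Q4 Forecast", "Q4 revenue is forecast at 2.1M.", {"sam", "priya"}),
    Document("HR Review", "Confidential salary review notes.", {"priya"}),
]
visible = permission_trim(docs, "sam")
prompt, citations = build_prompt("Summarise the Q4 outlook.", visible)
print(citations)  # ['Q4 Forecast']
```

Because trimming happens before augmentation, content the user cannot open never enters the context window at all.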

Truncation and Retrieval Strategies

When the content is too large for the context window, Copilot falls back on several strategies, and each has side effects you should be aware of:

- File Grounding Cap: Copilot will not use thousands of files. If a user's prompt references a huge number of documents, M365 Copilot will select the ~20 most relevant files and ignore the rest.

- Chunking: For very long documents (up to the 1.5M-word limit), the RAG system breaks the text into smaller, semantically-related chunks for indexing.

- "Lost in the Middle": When a long document or chat history is truncated to fit the window, LLMs have a known weakness: they tend to prioritise information at the very beginning and very end of the context, effectively "forgetting" information from the middle.
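Chunking is easier to picture with an example. The sketch below uses fixed-size word windows with a small overlap, which is a simpler technique than the semantic chunking described above and is used here only as a stand-in. The function name and the sizes are assumptions.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most chunk_size words.

    Each chunk repeats the last `overlap` words of the previous one,
    so a sentence cut at a boundary still appears whole in one chunk.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the final chunk already reaches the end of the text
    return chunks


document = " ".join(f"word{i}" for i in range(500))
chunks = chunk_words(document, chunk_size=200, overlap=20)
print(len(chunks))  # 3
```

Each chunk can then be indexed and retrieved on its own, which is what lets a retrieval system pull one relevant passage out of a very long file.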

Limitations and Challenges of Copilot Context Windows

Whilst powerful, this architecture presents clear limitations and risks that technical managers must mitigate.

Practical Limits: Word Counts, File Caps, and Latency

As of October 2025, the following practical limits are key operational thresholds:

- Document Summarisation/Q&A: ~1.5 million words or 300 pages.

- Document Rewriting (in-app): ~3,000 words.

- File Grounding (M365 Chat): ~20 most relevant files.

- File Upload (M365 Chat): Up to 512 MB per file (this limit is rolling out).

- Copilot Studio (Custom Agents): Up to 8,000 characters for instructions and grounding in up to 100 SharePoint files.

“Thin” Context and Hallucinations

On the flip side of the token limit issue, providing too little or ambiguous context can also be problematic. If Copilot doesn’t have specific information to ground an answer, it may produce a plausible-sounding but incorrect response – a phenomenon known as hallucination.

For example, asking “Explain the client’s requirements for Project Y” in Copilot Chat without providing or referencing any documents might lead it to guess or generalise, possibly giving a wrong summary. The AI will try to be helpful even if the context window is essentially empty or missing key facts.

Licensing and Feature Availability

Not all Copilot users have the same abilities, which indirectly affects context usage. Microsoft 365 Copilot add-on license users get the full Graph-integrated experience (the Work tab in Copilot Chat, ability to reference Teams messages, meetings, etc.), whereas users without that license are limited to public web context or their own provided text.

If you don’t have a Copilot license, you effectively have a smaller context scope (only web data, or whatever you paste in). Those with the license have a much richer context via their tenant data. Additionally, Microsoft has introduced priority access vs standard access modes. Priority access might mean faster responses or early feature releases, whereas standard could mean slower retrieval or lower rate limits.

Best Practices for Maximising Context Window Efficiency

Controlling Copilot's context is a skill. This playbook provides a concrete process for getting focused, accurate, and reliable results.

A 7-Step Playbook for Focused Copilot Prompts

- Scope Your Goal: Be specific. Do not ask, "What's new?" Ask, "What updates did Sam send via email about 'Project Titan' in the last 7 days?"

- Ground with Sources: Explicitly tell Copilot what "library" to use. Reference specific file paths (/Sales/Q4-Forecast.xlsx), meeting names ("the Teams meeting on 12 October"), or documents.

- Provide Context: Explain why you need the information ("I am preparing a client presentation") and what "persona" to adopt ("Act as a senior financial analyst").

- Chunk Your Tasks: Do not ask for a summary, analysis, and a PowerPoint in one prompt. For a long document, ask Copilot to summarise it first. Then, in a new prompt, ask it to extract action items from the summary.

- Constrain the Output: Define the format to save token budget, for example "Provide the answer as a 3-column table" or "Limit the summary to 5 bullet points".

- Use Consistent Identifiers: When discussing a specific document across a long chat, give it a shorthand name ("Call this 'Policy-v4'") and use it consistently.

- Verify and Critique: Do not trust the first output. Ask Copilot to check its own work.

Always remember to run a 'Lost-Information Check'. After Copilot gives you a summary of a long document, force it to re-examine the context by asking it to self-critique. This counters the "lost in the middle" context window problem. Try these prompts:

- "Based on the source document, what key assumptions did you make in your summary?"

- "What important topics or quantitative data from the document did you choose to exclude from your response?"
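Several playbook steps (scope, sources, context, and output constraints) can be captured in a reusable template so that every prompt carries them by default. This is one possible sketch. The function and parameter names are hypothetical, and the wording of each line is only a suggestion.

```python
def build_prompt(goal: str, sources: list[str], purpose: str,
                 persona: str, output_format: str) -> str:
    """Assemble a focused prompt from the playbook's main ingredients."""
    parts = [
        f"Act as {persona}.",                                 # step 3: persona
        f"Context: {purpose}.",                               # step 3: why you need it
        f"Task: {goal}",                                      # step 1: scoped goal
        "Use only these sources: " + "; ".join(sources) + ".",  # step 2: grounding
        f"Output format: {output_format}.",                   # step 5: constrained output
    ]
    return "\n".join(parts)


prompt = build_prompt(
    goal="What updates did Sam send via email about 'Project Titan' in the last 7 days?",
    sources=["/Sales/Q4-Forecast.xlsx", "the Teams meeting on 12 October"],
    purpose="I am preparing a client presentation",
    persona="a senior financial analyst",
    output_format="a 3-column table",
)
print(prompt)
```

Keeping the template short matters: every line of the prompt also spends tokens from the same budget.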

Context windows might be an “under the hood” technical concept, but for end-users and admins guiding Microsoft Copilot deployments, they make a tangible difference in daily use. Knowing the limits and behaviours of Copilot’s context window helps explain why it sometimes forgets things or produces off-base answers – and more importantly, how to prevent those issues.

For teams rolling out Copilot, the next steps should be to teach everyone these best practices. Get in touch today to find out how we can help you and your team level up!
