Top AI Asset Management Platforms Reviewed

AI asset management platforms have moved from experimental quant projects to everyday tools for wealth managers, CIO offices, and institutional teams. Fee pressure, tougher regulation, and the grind of finding consistent alpha mean firms are looking for ways to do more with the same headcount, while documenting every decision.

As the CFA Institute notes, AI is now “permeating the entire investment value chain”, from research to execution, rather than sitting in isolated innovation units. In response, mainstream portfolio and risk systems now ship with embedded machine learning, alternative data ingestion, and predictive analytics, rather than bolt‑on “AI labs” that sit off to the side.

This article looks at those platforms as products, not magic alpha machines. The focus is on what they can actually do:

- How they construct and rebalance portfolios under real‑world constraints

- The depth and transparency of their risk analytics

- How far they support personalisation and suitability at scale

- Their integrations, deployment options, fees, and governance features

The central theme is augmentation, not replacement. The leading tools are explicitly built as human‑in‑the‑loop co‑pilots: they surface signals, generate candidate portfolios, flag risks, and automate much of the grunt work, but investment committees still set mandates and sign off changes. That approach aligns with supervisory expectations in major markets, where regulators such as the UK’s Financial Conduct Authority and the EU’s ESMA emphasise accountability, explainability, and robust oversight when firms deploy AI.

Throughout the review, platforms are compared on how well they support that shared workflow – portfolio construction, risk, personalisation, integrations, fees, and oversight – so you can narrow a shortlist that fits your existing investment process rather than outsourcing judgement to a black box.

Platform Types and Selection Criteria

Main types of AI asset management platforms

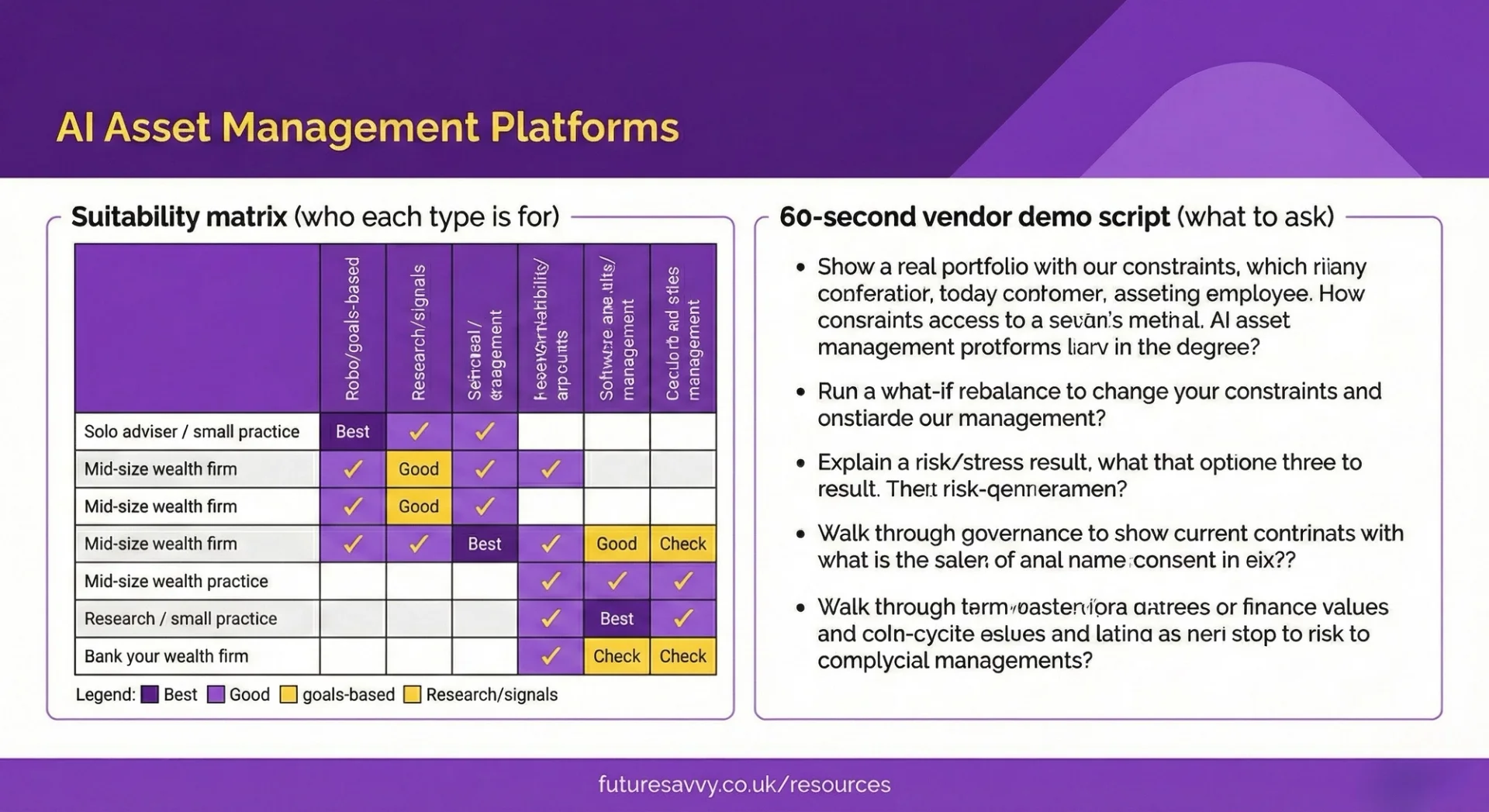

Think of the market in four broad buckets. Most vendors blur these lines, but this mental map helps you compare like with like.

Robo‑style wealth and goals‑based platforms

Designed for advisers, wealth managers, and smaller firms. They focus on turning client fact‑finds into automated portfolio proposals, mapping risk profiles to model ranges, and producing client‑friendly, compliant reports. The AI typically augments suitability checks, tax‑aware rebalancing, and narrative generation rather than replacing adviser judgement. This aligns with how leading digital wealth platforms are described by industry reviewers such as Morningstar and reflects the broader trend towards “hybrid advice” highlighted by the CFA Institute.

Institutional research and signal platforms

Built for quant teams, hedge funds, and institutional managers. Their edge lies in discovering and testing signals across large data sets, constructing multi‑asset models, and running realistic backtests with transaction costs, liquidity limits, and borrow constraints. Human‑in‑the‑loop oversight and replicable research workflows are key selection points.

As one senior quant CIO at a European asset manager puts it, “The real value is not a single signal, but an industrialised research pipeline you can audit and rerun when conditions change.” Platforms in this category increasingly mirror the research standards seen in offerings such as BlackRock’s systematic investing tools.

Risk and allocation co‑pilots for CIOs and multi‑asset teams

These tools act as allocation engines and scenario labs. They help CIOs set policy portfolios, explore regime shifts, stress test across macro and market shocks, and translate top‑down views into implementable, constraint‑aware portfolios. The AI focuses on regime detection and tail‑risk diagnostics, with committees retaining final say. This emphasis on scenario analysis and governance echoes the priorities outlined by regulators such as the FCA around algorithmic decision‑making and risk control.

Enterprise AI layers on top of existing PMS/OMS stacks

Here the priority is integration and workflow. These platforms sit over existing systems, standardising data, orchestrating processes, and exposing modular AI components (optimisers, risk engines, suitability checks) via APIs. Governance, security, and model lifecycle management tend to matter more than any single “smart” algorithm, consistent with enterprise AI adoption patterns seen in large buy‑side firms and documented in vendor‑neutral surveys from organisations such as the Investment Association.

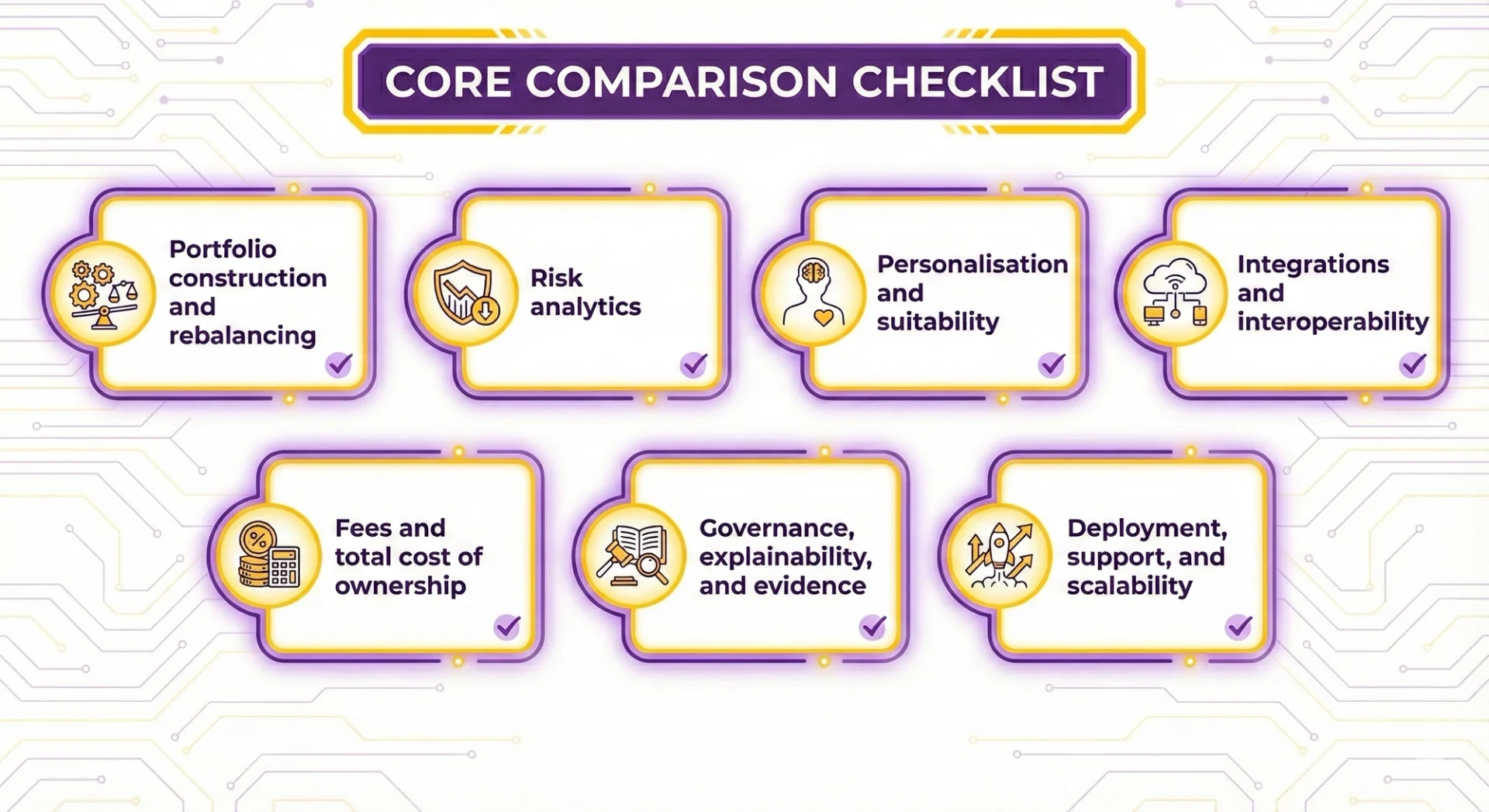

Core comparison checklist

Across all types, you can use a single yardstick to build your shortlist:

Portfolio construction and rebalancing

Check which optimisation methods are available, how easily you can encode mandates and ESG rules, and whether turnover, trading costs, and tax are modelled realistically. Test how far you can adjust templates before custom engineering is needed.

Risk analytics

Look for position‑to‑factor drill‑downs, pre‑ and post‑trade risk, and flexible stress testing. Forward‑looking features such as regime detection are useful, but only if assumptions, calibration and limitations are transparent.

Personalisation and suitability

Assess how goals, risk budgets, and exclusions are translated into portfolios at scale. Strong platforms combine mass customisation with clear explanations and rule libraries that keep compliance comfortable.

Integrations and interoperability

Prioritise proven connectivity to OMS/EMS/PMS, custodians, data vendors, and CRM, plus an API‑first design with robust security and auditability. Ask for concrete examples, not just slideware.

Fees and total cost of ownership

Go beyond licence or AUM‑based pricing. Include data and compute pass‑throughs, implementation and integration effort, and any vendor‑specific tooling that might create lock‑in.

Governance, explainability, and evidence

Demand visible data and model governance, explainable outputs you can use with clients and regulators, and performance evidence that can be reproduced on your own data.

Deployment, support, and scalability

Finally, check deployment options (cloud, on‑prem, hybrid), SLAs and resilience, multi‑asset/multi‑jurisdiction coverage, and the vendor’s ability to support training, pilots, and phased roll‑outs.

Platform A: Configurable portfolio engine for institutional users

Platform A sits firmly in the “quant co‑pilot” camp: it is built for CIO offices and institutional managers that want to encode their own views and policies into a powerful multi‑asset optimiser, rather than accept a pre‑packaged house view. This aligns with how many large managers are now using AI as a decision‑support layer rather than a black box, a pattern highlighted in the CFA Institute’s overview of AI in asset management.

On the portfolio construction side, it offers a full menu of optimisation methods, from classic mean‑variance and robust approaches to factor‑aware and machine‑learning driven engines. The key strength is how these optimisers handle messy, real‑world constraints. You can typically layer in:

- Detailed constraint libraries: issuer and sector caps, hard client mandates, ESG and exclusion lists, in line with the kind of policy rules set out in institutional investment governance codes.

- Practical frictions: turnover limits, liquidity filters, transaction cost and tax impacts, reflecting the emphasis on implementation costs in practitioner guides such as the BlackRock primer on portfolio construction.

- Rebalancing logic that mixes rules (drift bands, risk budgets) with scenario‑aware overrides when markets dislocate.
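To make the constraint and friction layers concrete, here is a minimal Python sketch of a check that tests a proposed portfolio against issuer and sector caps, an exclusion list, and a one‑way turnover limit. The `Holding` type, the `check_constraints` function and every threshold are hypothetical illustrations, not Platform A's API; a production optimiser would typically enforce these limits inside the optimisation itself rather than only checking them afterwards.

```python
from dataclasses import dataclass


@dataclass
class Holding:
    ticker: str
    issuer: str
    sector: str
    weight: float  # fraction of portfolio NAV


def check_constraints(proposed, current_weights, max_issuer=0.05,
                      max_sector=0.25, excluded=frozenset(),
                      max_turnover=0.20, tol=1e-9):
    """Return human-readable breaches; an empty list means the proposal passes.

    All limits are illustrative placeholders, not recommended values.
    """
    breaches = []
    issuer_totals, sector_totals = {}, {}
    for h in proposed:
        if h.ticker in excluded:
            breaches.append(f"{h.ticker}: on exclusion list")
        issuer_totals[h.issuer] = issuer_totals.get(h.issuer, 0.0) + h.weight
        sector_totals[h.sector] = sector_totals.get(h.sector, 0.0) + h.weight

    for issuer, w in issuer_totals.items():
        if w > max_issuer + tol:
            breaches.append(f"issuer {issuer}: {w:.2%} exceeds cap {max_issuer:.2%}")
    for sector, w in sector_totals.items():
        if w > max_sector + tol:
            breaches.append(f"sector {sector}: {w:.2%} exceeds cap {max_sector:.2%}")

    # One-way turnover: half the sum of absolute weight changes.
    proposed_weights = {h.ticker: h.weight for h in proposed}
    tickers = set(proposed_weights) | set(current_weights)
    turnover = 0.5 * sum(abs(proposed_weights.get(t, 0.0) - current_weights.get(t, 0.0))
                         for t in tickers)
    if turnover > max_turnover + tol:
        breaches.append(f"turnover {turnover:.2%} exceeds limit {max_turnover:.2%}")
    return breaches
```

In a workflow like the one described for Platform A, a non‑empty result would route the proposal to human sign‑off rather than straight to execution.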

Risk analytics go well beyond headline numbers. Factor decomposition drills from portfolio down to individual positions, exposing exposures and concentrations in language risk teams already use. Scenario libraries cover macro, rates, credit, and liquidity shocks, with the option to design bespoke stress tests that reflect your own playbook.

This is broadly consistent with how regulators expect firms to evidence risk understanding; for example, the FCA’s guidance on stress testing and scenario analysis emphasises the link between models, governance, and decision‑making. Importantly, pre‑ and post‑trade checks are wired directly into workflows so breaches trigger approvals rather than after‑the‑fact reports. As one head of risk at a UK asset manager put it, “the control is only real if the trade cannot go through without passing the model and the human sign‑off.”
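The factor‑based scenario analysis described in this section can be approximated, to first order, by multiplying each position's weight by its factor sensitivities and the scenario's factor shocks. The Python sketch below assumes a purely linear factor model, so it ignores convexity, option payoffs and liquidity effects that a real scenario engine would need to capture; the function name, factor names, sensitivities and shocks are all hypothetical.

```python
def stress_test(weights, exposures, scenario):
    """Linear scenario P&L.

    weights:   {position: portfolio weight}
    exposures: {position: {factor: sensitivity}}, e.g. equity beta, or -duration for rates
    scenario:  {factor: shock}, e.g. {"equity": -0.20, "rates": 0.01}
    Returns (portfolio return, {position: contribution}) as fractions of NAV.
    """
    contributions = {}
    for position, w in weights.items():
        betas = exposures.get(position, {})
        position_return = sum(beta * scenario.get(factor, 0.0)
                              for factor, beta in betas.items())
        contributions[position] = w * position_return
    return sum(contributions.values()), contributions


weights = {"global_equity": 0.6, "govt_bonds": 0.4}
exposures = {"global_equity": {"equity": 1.0},
             "govt_bonds": {"rates": -6.0}}  # duration of about 6 years
scenario = {"equity": -0.20, "rates": 0.01}  # 20% equity fall, +100bp on rates
total, by_position = stress_test(weights, exposures, scenario)
print(f"{total:.1%}", {k: round(v, 4) for k, v in by_position.items()})
```

Under these assumptions the portfolio loses about 14.4%, and the position‑level contributions show that equities account for 12 percentage points of that loss, which is the kind of drill‑down a risk committee would expect to see.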

The trade‑off is complexity. Platform A rewards firms that have quant or risk specialists to tune models, police data inputs, and challenge AI‑generated recommendations. It is a strong fit if you want:

- Human‑in‑the‑loop oversight anchored in your existing governance.

- Evidence that risk models behave sensibly across regimes, not just in a glossy backtest.

Platform B: Template‑driven portfolios with embedded risk controls

Platform B targets wealth and retail‑focused managers who need consistency and speed more than bespoke research infrastructure. It leans on curated model portfolios and allocation templates, allowing advisers to roll out suitable portfolios at scale with minimal configuration.

Portfolio construction is primarily template‑driven: you start from approved models and adjust within defined ranges rather than building from scratch. Simple risk budgets, drift bands, and periodic review cycles trigger suggested rebalances, with the AI layer helping to prioritise which accounts to action and when. This mirrors common approaches in regulated advice markets, where standardised model portfolios are used to demonstrate suitability and control dispersion of outcomes.
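A simplified version of the drift‑band trigger might look like the Python sketch below: each account is compared with its model portfolio, accounts whose largest absolute drift exceeds the band are flagged, and the flagged list is ordered by drift size. Ranking by drift is a deliberately simple stand‑in for the AI‑driven prioritisation described above, and the 3% band, account IDs and asset names are hypothetical.

```python
def accounts_to_rebalance(accounts, model, band=0.03):
    """Flag accounts whose largest absolute drift from the model exceeds `band`.

    accounts: {account_id: {asset: current weight}}
    model:    {asset: target weight}
    Returns [(account_id, max_drift), ...] sorted with the largest drift first.
    """
    flagged = []
    for account_id, weights in accounts.items():
        assets = set(model) | set(weights)
        max_drift = max(abs(weights.get(a, 0.0) - model.get(a, 0.0)) for a in assets)
        if max_drift > band:
            flagged.append((account_id, max_drift))
    flagged.sort(key=lambda item: item[1], reverse=True)
    return flagged


model = {"equity": 0.60, "bonds": 0.40}
accounts = {
    "A": {"equity": 0.62, "bonds": 0.38},  # within band
    "B": {"equity": 0.70, "bonds": 0.30},  # large drift
    "C": {"equity": 0.55, "bonds": 0.45},  # moderate drift
}
for account_id, drift in accounts_to_rebalance(accounts, model):
    print(account_id, f"{drift:.1%}")
```

A real prioritisation would usually also weigh account size, tax lots and trading costs, which is where a learned ranking could add value over a single drift number.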

Risk analytics surface standard metrics such as volatility, value‑at‑risk, and factor exposure in dashboards designed for advisers, not quants. The emphasis is on clear alerts when portfolios breach pre‑set risk, suitability, or mandate thresholds, supported by approval workflows and audit trails that satisfy compliance teams. These controls echo patterns described in wealth management case studies from large platforms that use AI primarily to enhance monitoring, rather than to redefine investment processes.

The compromise is depth. You get less control over optimisation settings, custom factor definitions, or advanced predictive risk features. Platform B tends to suit firms that:

- Want tight guardrails, rapid deployment, and consistent client journeys.

- Prefer configuration of templates over building and validating their own risk models.

How to compare platforms on portfolio construction and risk

When you view these platforms through portfolio construction and risk engines, the key is not which is “smarter” but which better reflects how you actually run money.

Useful questions for vendors include:

- How easily can we encode our investment policy, client mandates, ESG rules, and liquidity limits?

- Can we run realistic, tax‑aware and cost‑aware “what‑if” rebalances before committing trades?

- How transparent are your optimiser assumptions and backtests, and can we reproduce results with our own data?

Signs of good fit tend to cluster around:

- The right balance between template convenience and room for custom rules and scenarios.

- Clear evidence that risk models and stress tests stay robust across different market regimes, not only in one carefully chosen sample.

Framed this way, Platform A and Platform B are not direct substitutes: they anchor opposite ends of a spectrum between configurability and simplicity. Your shortlist should reflect where your organisation needs to sit on that spectrum, given your governance, team skills, and client promises.

Platform C: Mass‑customisation for wealth and distribution partners

Platform C is pitched at firms that need thousands of subtly different portfolios, all sitting on a controlled, central rule set. It targets private banks, advisers, and distributors who want to white‑label digital experiences without building the engine themselves.

On the personalisation side, it starts from goals rather than risk buckets alone, in line with the shift towards goals‑based wealth management seen across leading private banks. Advisers capture time horizons, cash‑flow needs, and risk budgets, then the optimiser translates those into portfolio settings and rebalancing rules.

Suitability data from KYC flows directly into these settings, reducing the gap between fact‑find, risk profiling, and the actual model used in production. ESG and thematic views are layered via rule‑based tilts and exclusion lists that can be configured per client or household, but anchored to firm‑wide templates, mirroring how SFDR and UK SDR frameworks are pushing firms towards more structured sustainability policies.
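The idea of client‑level exclusions anchored to a firm‑wide template can be illustrated with a short Python sketch: firm exclusions always apply, a client can only add to them, and the remaining template weights are scaled back up pro rata so the portfolio stays fully invested. Pro‑rata redistribution is just one simple assumption; a real platform might instead substitute the closest permitted alternative or re‑run the optimiser. The `personalise` function and the holdings are hypothetical.

```python
def personalise(template, firm_exclusions, client_exclusions=frozenset()):
    """Apply firm-wide plus client-specific exclusions to a model template.

    Clients can add exclusions but cannot remove firm-wide ones, because the
    two sets are combined with a union. Remaining weights are rescaled pro rata.
    """
    excluded = set(firm_exclusions) | set(client_exclusions)
    kept = {asset: w for asset, w in template.items() if asset not in excluded}
    total = sum(kept.values())
    if total <= 0:
        raise ValueError("exclusions remove the entire template")
    return {asset: w / total for asset, w in kept.items()}


template = {"global_equity": 0.5, "green_bonds": 0.3, "oil_major": 0.2}
print(personalise(template, firm_exclusions={"oil_major"}))
```

Because every client portfolio is derived from the same template and the same firm‑wide exclusion set, compliance can trace each client's weights back to the rules that produced them.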

For client experience, the emphasis is on distribution‑friendly packaging:

- White‑label portals and branded adviser dashboards.

- Plain‑language rationales for portfolio changes, risk shifts, and performance.

- Reporting in multiple formats (portal, PDF, data feeds) mapped to local regulatory templates.

Governance is handled through central rule libraries and audit trails, reflecting the sort of “embedded controls” approach described in the CFA Institute’s work on AI in investment management. Compliance teams can lock in policies (for example, ESG exclusions, concentration limits, suitability thresholds), then see how those rules flow from client profile to model to individual trade decisions.

This helps firms scale personalisation without losing line of sight over what has actually been implemented. As one large‑bank COO put it in a recent industry panel, “If you cannot trace a client outcome back through the rules that produced it, you have not automated – you have just outsourced your risk.”

Platform D: Enterprise AI layer with strong MLOps and explainability

Platform D is closer to an “AI operating system” for large asset managers than a pre‑built portfolio factory. It assumes you already have models, processes, and oversight in place, and want a governed environment to industrialise them.

Its core strength is formalised MLOps and model governance, echoing patterns seen in regulated financial services AI deployments. Data moves through curated feature stores with traceable lineage and automated quality checks. Models are versioned, with explicit approval workflows and champion/challenger testing so new approaches can be trialled alongside incumbents. Continuous monitoring tracks drift and performance decay, with retraining policies defined up front rather than improvised after something breaks.
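Drift monitoring of the kind described here is often built on simple distribution‑shift statistics. One common choice, used here as an assumption rather than as Platform D's documented method, is the population stability index (PSI), which compares the binned distribution of a model input or score in production with its distribution at validation time. The Python sketch bins on the reference sample's range; the widely quoted rule of thumb that a PSI above roughly 0.25 signals material shift is an industry convention, not a regulatory threshold.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e), where e and a are the shares
    of the reference and current samples falling in each bin.

    Bins are equal-width over the reference range; current values outside that
    range are clipped into the edge bins. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(reference), shares(current)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 100 for i in range(100)]      # score distribution at validation
shifted = [0.5 + i / 200 for i in range(100)]  # production scores drifted upwards
print(round(population_stability_index(reference, reference), 6))
print(round(population_stability_index(reference, shifted), 2))
```

In a governed setup, a breach of the chosen threshold would feed the predefined retraining or champion/challenger review policy rather than trigger an ad hoc fix.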

Explainability is treated as a first‑class output. Every allocation and security‑selection decision can be decomposed into drivers, then surfaced as narratives that are understandable by committees, clients, and regulators. The language and evidence are designed to sit comfortably with obligations such as Consumer Duty and MiFID II suitability and cost‑benefit expectations, and with emerging AI governance principles from regulators.
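For a linear scoring model, decomposing a decision into drivers is straightforward: each feature's contribution is its coefficient times its deviation from a baseline, and the largest contributions can be turned into a short narrative. The Python sketch below assumes such a linear model with hypothetical feature names and coefficients; for non‑linear models, additive attribution methods such as Shapley‑value approaches play a similar role but are not shown here.

```python
def explain(coefficients, features, baseline, top_n=2):
    """Decompose a linear score change into per-feature contributions.

    contribution_k = coefficient_k * (feature_k - baseline_k); the contributions
    sum exactly to score(features) - score(baseline) for a linear model.
    """
    contributions = {k: coefficients[k] * (features[k] - baseline[k])
                     for k in coefficients}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    phrases = [f"{name} {'raised' if c >= 0 else 'lowered'} the score by {abs(c):.2f}"
               for name, c in ranked[:top_n]]
    return contributions, "; ".join(phrases)


coefficients = {"momentum": 0.5, "valuation": -0.3, "quality": 0.2}
features = {"momentum": 1.0, "valuation": 2.0, "quality": 0.5}
baseline = {"momentum": 0.0, "valuation": 0.0, "quality": 0.0}
contributions, narrative = explain(coefficients, features, baseline)
print(narrative)
```

The generated sentence is deliberately plain; a production system would map feature names to client‑ and regulator‑friendly language before surfacing it.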

The trade‑off is that Platform D is not prescriptive about model portfolios or house views. It suits institutions that want to wrap AI and automation around existing CIO processes without ceding control to a vendor template.

How to compare platforms on personalisation and governance

When you move beyond headline “AI” labels, the key differences lie in how well each platform can scale tailored portfolios while staying explainable and governable.

On personalisation, probe:

- How many portfolio variants can be run before rebalancing and oversight become fragile?

- Can advisers and portfolio managers retrieve clear, client‑ready explanations for AI‑driven changes?

On governance, focus on:

- Whether compliance can see and control model versions, approvals, and change logs from a single place.

- How bias, fairness, and suitability are monitored across segments as you add new models, rules, or product ranges.

Putting these questions to vendors will quickly reveal whether a platform behaves like a controlled production environment or a black box with a glossy front end.

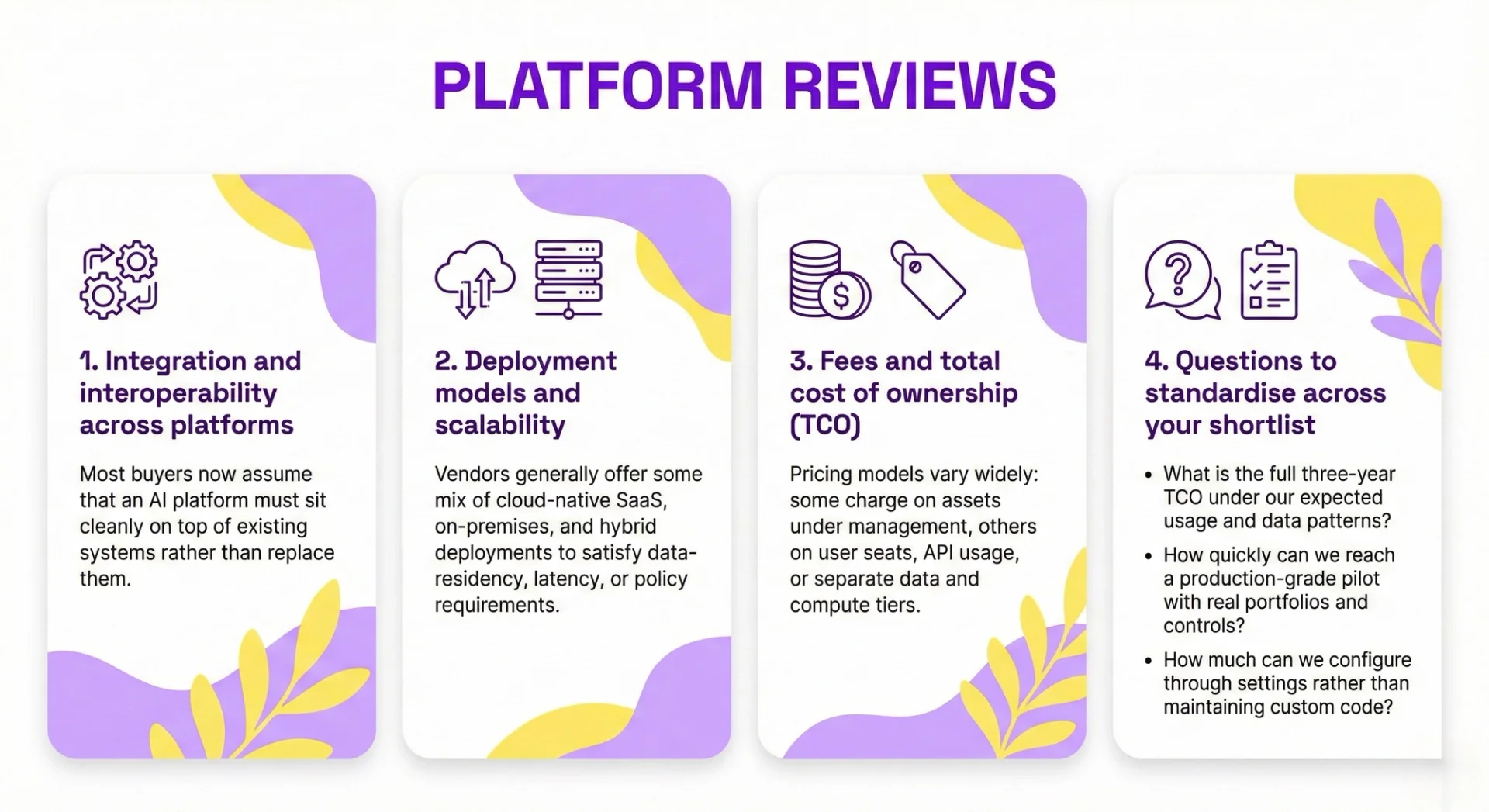

Platform Reviews III: Integrations, Deployment, and Economics

Even when AI features look similar on paper, platforms often live or die on how well they plug into your stack, scale in production, and price their offering. This is where shortlists usually narrow sharply.

Integration and interoperability across platforms

Most buyers now assume that an AI platform must sit cleanly on top of existing systems rather than replace them. Typical touchpoints include:

- Portfolio, order and execution management systems

- Custodians, fund administrators and market‑data vendors

- CRM, reporting, and client‑portal tooling

The more mature platforms usually share three traits:

- API‑first design with clear documentation, test sandboxes and SDKs

- Support for common industry standards (for example, the FIX protocol for order flow), plus event‑driven updates that keep risk and exposures current intra‑day

- Enterprise security by default – single sign‑on, role‑based access, and auditable change logs
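Event‑driven updates can be pictured as a small in‑memory book that reacts to fill and price events and recomputes weights on demand, so exposures reflect the latest trades rather than an overnight batch. The Python sketch below is a hypothetical illustration: the `Fill` and `Book` names are invented, cash and FX are ignored, and a real integration would consume events from an OMS or market‑data feed rather than through direct method calls.

```python
from dataclasses import dataclass, field


@dataclass
class Fill:
    ticker: str
    quantity: float  # positive for buys, negative for sells
    price: float


@dataclass
class Book:
    positions: dict = field(default_factory=dict)  # ticker -> quantity held
    prices: dict = field(default_factory=dict)     # ticker -> last known price

    def on_fill(self, fill: Fill) -> None:
        """Apply an execution event to positions and record the traded price."""
        self.positions[fill.ticker] = self.positions.get(fill.ticker, 0.0) + fill.quantity
        self.prices[fill.ticker] = fill.price

    def on_price(self, ticker: str, price: float) -> None:
        """Apply a market-data event."""
        self.prices[ticker] = price

    def weights(self) -> dict:
        """Current market-value weights (cash and FX deliberately ignored)."""
        values = {t: q * self.prices[t] for t, q in self.positions.items()}
        total = sum(values.values())
        return {t: v / total for t, v in values.items()} if total else {}


book = Book()
book.on_fill(Fill("AAA", 100, 10.0))
book.on_fill(Fill("BBB", 50, 20.0))
print(book.weights())
book.on_price("AAA", 30.0)  # an intraday price move updates exposures immediately
print(book.weights())
```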

Industry surveys consistently show integration as a top adoption barrier for buy‑side technology, with firms prioritising platforms that interoperate cleanly with existing OMS/PMS and data vendors rather than forcing wholesale replacement of core systems.

In due diligence, ask vendors to supply reference architectures and examples from clients that resemble your own set‑up. Probe what is genuinely “plug‑and‑play” versus what demands a custom integration project and internal engineering time.

Deployment models and scalability

Vendors generally offer some mix of cloud‑native SaaS, on‑premises, and hybrid deployments to satisfy data‑residency, latency, or policy requirements. The more “AI‑heavy” the workflows, the more important it is to test real‑world performance rather than accept slideware. The CFA Institute’s work on AI in investment management highlights that perceived model quality matters little if systems cannot operate reliably at production scale.

Key items to validate in a pilot:

- Scaling of intraday calculations for large, multi‑asset books

- Robust support for multiple currencies, tax regimes, and regulatory jurisdictions

- Documented SLAs, disaster‑recovery plans, and evidence of resilience testing

Supervisors have also begun to expect robust operational resilience for outsourced and cloud services, reflected in guidance from regulators such as the [UK Financial Conduct Authority](https://www.fca.org.uk/firms/operational-resilience). This is also where you see how well the platform’s risk, optimisation, and personalisation engines cope when exposed to your actual data volumes and complexity.

Fees and total cost of ownership (TCO)

Pricing models vary widely: some charge on assets under management, others on user seats, API usage, or separate data and compute tiers. The headline figure rarely tells the full story. As one chief operating officer at a global wealth manager put it, “The licence line is the cheapest part of any serious platform – the real cost lives in the change you have to make around it.”

Watch for:

- One‑off and indirect costs – implementation, data onboarding, bespoke integration, training, and internal change‑management effort

- Lock‑in risks – proprietary data models, scripting languages, or workflows that are hard to unwind

Market studies on wealth and asset‑management technology frequently show that integration and change‑management costs can exceed initial licence fees over a three‑ to five‑year horizon, particularly where vendors rely on proprietary languages or closed data schemas. A disciplined proof‑of‑value can keep this grounded. Define upfront how you will measure net benefit, for example:

- Basis‑point impact after costs

- Time to complete rebalances and checks

- Reduction in manual reconciliations or compliance errors
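The TCO and net‑benefit arithmetic can be written down explicitly so every vendor is scored the same way. The Python sketch below assumes flat AUM, a licence fee charged in basis points of AUM, and fixed annual data, compute and internal costs; the function names and all figures in the example are hypothetical placeholders, not market prices. Converting time saved or errors avoided into a money figure for `gross_benefit_per_year` is itself an assumption you would need to document.

```python
def three_year_tco(aum, licence_bps, data_compute_per_year, internal_per_year,
                   one_off, years=3):
    """Total cost of ownership over `years`, assuming flat AUM and flat annual costs."""
    annual_licence = aum * licence_bps / 10_000  # 1 bp = 0.01% of AUM
    return one_off + years * (annual_licence + data_compute_per_year + internal_per_year)


def net_benefit_bps(gross_benefit_per_year, tco, aum, years=3):
    """Annualised benefit after all costs, expressed in basis points of AUM."""
    return (gross_benefit_per_year * years - tco) / years / aum * 10_000


aum = 1_000_000_000  # hypothetical 1bn book
tco = three_year_tco(aum, licence_bps=2, data_compute_per_year=50_000,
                     internal_per_year=100_000, one_off=300_000)
print(f"3-year TCO: {tco:,.0f}")
print(f"Net benefit: {net_benefit_bps(600_000, tco, aum):.2f} bp a year")
```

Note how, even in this toy example, the one‑off and internal costs together exceed the three years of licence fees, which is the pattern the market studies above describe.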

Pilot on a realistic subset of portfolios and constraints so you can test vendor claims under your actual operating conditions and align them with the sort of value drivers highlighted in independent fintech benchmarks such as the World Economic Forum’s work on AI in financial services.

Questions to standardise across your shortlist

To compare platforms fairly, put the same practical questions to each vendor:

- What is the full three‑year TCO under our expected usage and data patterns?

- How quickly can we reach a production‑grade pilot with real portfolios and controls?

- How much can we configure through settings rather than maintaining custom code?

Comparing the answers side by side will give you a far clearer sense of which AI platforms are operationally viable, not just functionally impressive. This kind of standardised questioning mirrors the structured evaluation approaches recommended in independent assessments of investment‑technology procurement, such as those from consultancies specialising in asset‑management transformation.

Conclusion and Next Steps

Across the platforms reviewed, the real differentiators are not the AI labels, but how well each system turns algorithms into a controlled, day‑to‑day investment engine. The strongest offerings combine robust portfolio construction under real‑world constraints, forward‑looking but explainable risk analytics, scalable personalisation and reporting, and integrations that actually work with your OMS, data stack, and governance processes.

There is no single “best” platform. The tools we have discussed suit different segments, data maturities and risk appetites. Your existing systems, the skills in your investment and risk teams, and your regulatory obligations will all shape which mix of configurability, automation and oversight is viable.

To move from reading to action:

- Turn the comparison checklist into 5–10 non‑negotiable requirements.

- Shortlist 3–4 platforms aligned to your segment (wealth, institutional, CIO office, or enterprise layer).

- Run a time‑boxed pilot with clear success metrics on performance, risk control, workflow impact and total cost before any wider roll‑out; this mirrors proof‑of‑concept approaches recommended in many [model risk governance frameworks](https://www.bis.org/publ/bcbs355.pdf) for complex analytics.

Above all, treat AI as a durable enhancement to your investment process, not a magic alpha machine. Prioritise platforms that make that enhancement transparent, controllable and auditable from day one, in line with the direction of travel in emerging AI and algorithmic governance expectations from major regulators.

Contact us today to learn more about how you and your team can make the most of AI in Data Analytics, Finance and Asset Management!

Frequently Asked Questions (FAQ)

No - managers mainly need to set use cases, guardrails, review points, and team norms.

Low-risk, high-friction tasks like drafting updates, summarising notes, inbox triage, and planning support.

For anything external-facing, sensitive, HR-related, financial, or involving commitments and decisions.

Require teams to verify outputs, explain reasoning, and build regular feedback and prompt-review routines.

Related Articles

Tips & Tricks

Tips & TricksAI Investing: The Future of Personal Wealth

AI investing gets thrown around as a buzzword, but it can mean very different things - from buying “AI stocks” to using AI tools inside the investment process. This article focuses on the practical middle ground: how robo-advisers, systematic trading tools, and AI research co-pilots support research, portfolio construction, monitoring, and reporting. It explains where AI adds real value (speed, scale, personalization, risk and compliance) and where it can go wrong (hype, opacity, weak controls, and scams).

L&D Insights

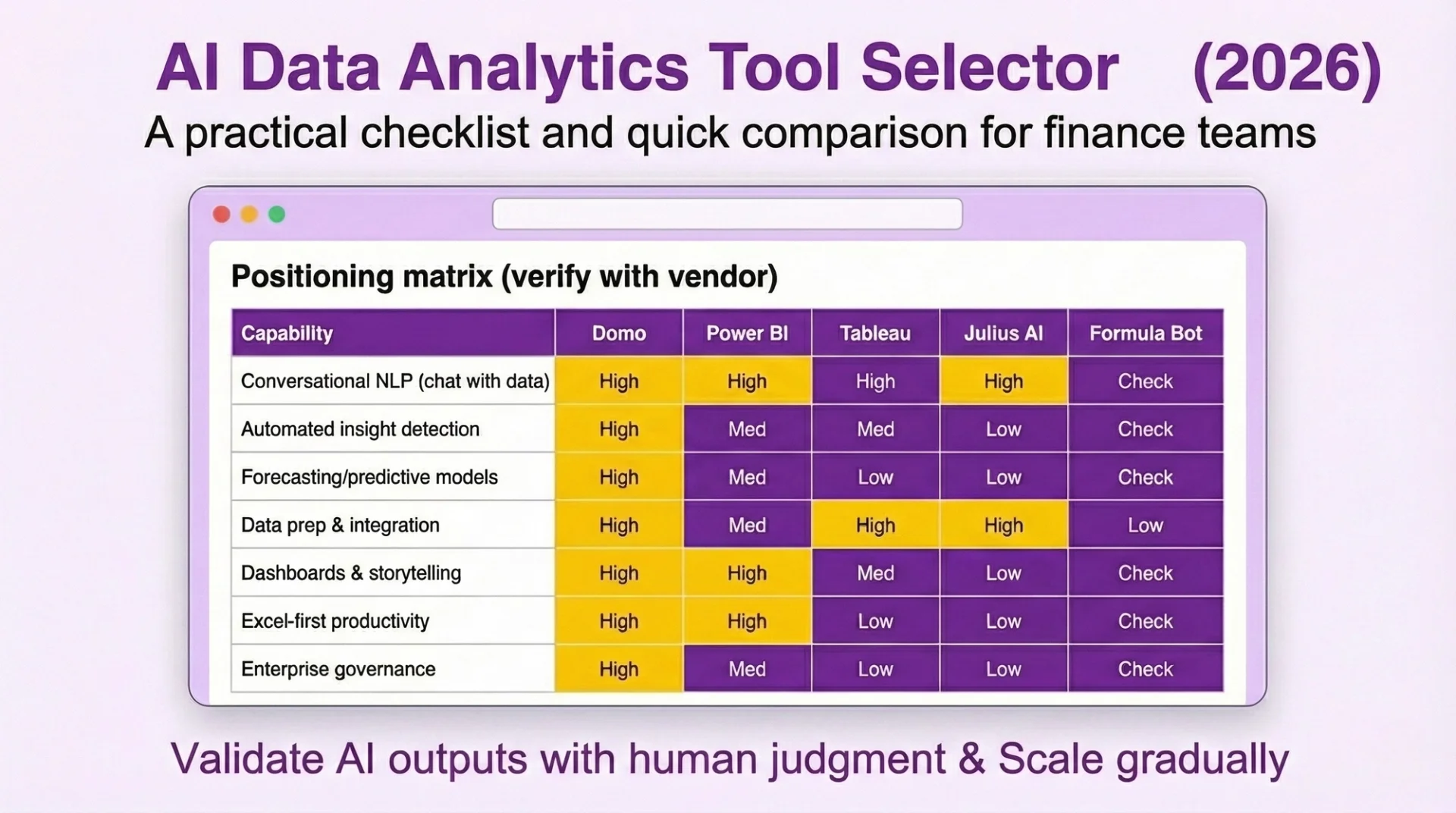

L&D InsightsBest AI Tools for Data Analytics in Finance 2026

AI is reshaping how finance teams analyse data - moving beyond manual spreadsheets to faster, smarter insights powered by machine learning and natural language. This blog post breaks down what AI data analytics is in 2026, the must-have features to look for, and how the “full-stack analyst” is emerging inside modern tools. You’ll also get a practical review of the best no-code AI analytics platforms for finance - plus tips to implement them confidently.

L&D Insights

L&D Insights